The Problem of Human Specialness in the Age of AI

Update (Feb. 29): A YouTube video of this talk is now available, plus a comment section filled (as usual) with complaints about everything from my speech and mannerisms to my failure to address the commenter’s pet topic.

Another Update (March 8): YouTube video of a shorter (18-minute) version of this talk, which I delivered at TEDxPaloAlto, is now available as well!

Here, as promised in my last post, is a written version of the talk I delivered a couple weeks ago at MindFest in Florida, entitled “The Problem of Human Specialness in the Age of AI.” The talk is designed as one-stop shopping, summarizing many different AI-related thoughts I’ve had over the past couple years (and earlier).

1. INTRO

Thanks so much for inviting me! I’m not an expert in AI, let alone mind or consciousness. Then again, who is?

For the past year and a half, I’ve been moonlighting at OpenAI, thinking about what theoretical computer science can do for AI safety. I wanted to share some thoughts, partly inspired by my work at OpenAI but partly just things I’ve been wondering about for 20 years. These thoughts are not directly about “how do we prevent super-AIs from killing all humans and converting the galaxy into paperclip factories?”, nor are they about “how do we stop current AIs from generating misinformation and being biased?,” as much attention as both of those questions deserve (and are now getting). In addition to “how do we stop AGI from going disastrously wrong?,” I find myself asking “what if it goes right? What if it just continues helping us with various mental tasks, but improves to where it can do just about any task as well as we can do it, or better? Is there anything special about humans in the resulting world? What are we still for?”

2. LARGE LANGUAGE MODELS

I don’t need to belabor for this audience what’s been happening lately in AI. It’s arguably the most consequential thing that’s happened in civilization in the past few years, even if that fact was temporarily masked by various ephemera … y’know, wars, an insurrection, a global pandemic … whatever, what about AI?

I assume you’ve all spent time with ChatGPT, or with Bard or Claude or other Large Language Models, as well as with image models like DALL-E and Midjourney. For all their current limitations—and we can discuss the limitations—in some ways these are the thing that was envisioned by generations of science fiction writers and philosophers. You can talk to them, and they give you a comprehending answer. Ask them to draw something and they draw it.

I think that, as late as 2019, very few of us expected this to exist by now. I certainly didn’t expect it to. Back in 2014, when there was a huge fuss about some silly ELIZA-like chatbot called “Eugene Goostman” that was falsely claimed to pass the Turing Test, I asked around: why hasn’t anyone tried to build a much better chatbot, by (let’s say) training a neural network on all the text on the Internet? But of course I didn’t do that, nor did I know what would happen when it was done.

The surprise, with LLMs, is not merely that they exist, but the way they were created. Back in 1999, you would’ve been laughed out of the room if you’d said that all the ideas needed to build an AI that converses with you in English already existed, and that they’re basically just neural nets, backpropagation, and gradient descent. (With one small exception, a particular architecture for neural nets called the transformer, but that probably just saves you a few years of scaling anyway.) Ilya Sutskever, cofounder of OpenAI (who you might’ve seen something about in the news…), likes to say that beyond those simple ideas, you only needed three ingredients:

(1) a massive investment of computing power,

(2) a massive investment of training data, and

(3) faith that your investments would pay off!

Crucially, and even before you do any reinforcement learning, GPT-4 clearly seems “smarter” than GPT-3, which seems “smarter” than GPT-2 … even as the biggest ways they differ are just the scale of compute and the scale of training data! Like,

- GPT-2 struggled with grade school math.

- GPT-3.5 can do most grade school math but it struggles with undergrad material.

- GPT-4, right now, can probably pass most undergraduate math and science classes at top universities (I mean, the ones without labs or whatever!), and possibly the humanities classes too (those might even be easier for GPT-4 than the science classes, but I’m much less confident about it). But it still struggles with, for example, the International Math Olympiad. How insane, that this is now where we have to place the bar!

Obvious question: how far will this sequence continue? There are certainly at least a few more orders of magnitude of compute before energy costs become prohibitive, and a few more orders of magnitude of training data before we run out of public Internet. Beyond that, it’s likely that continuing algorithmic advances will simulate the effect of more orders of magnitude of compute and data than however many we actually get.

So, where does this lead?

(Note: ChatGPT agreed to cooperate with me to help me generate the above image. But it then quickly added that it was just kidding, and the Riemann Hypothesis is still open.)

3. AI SAFETY

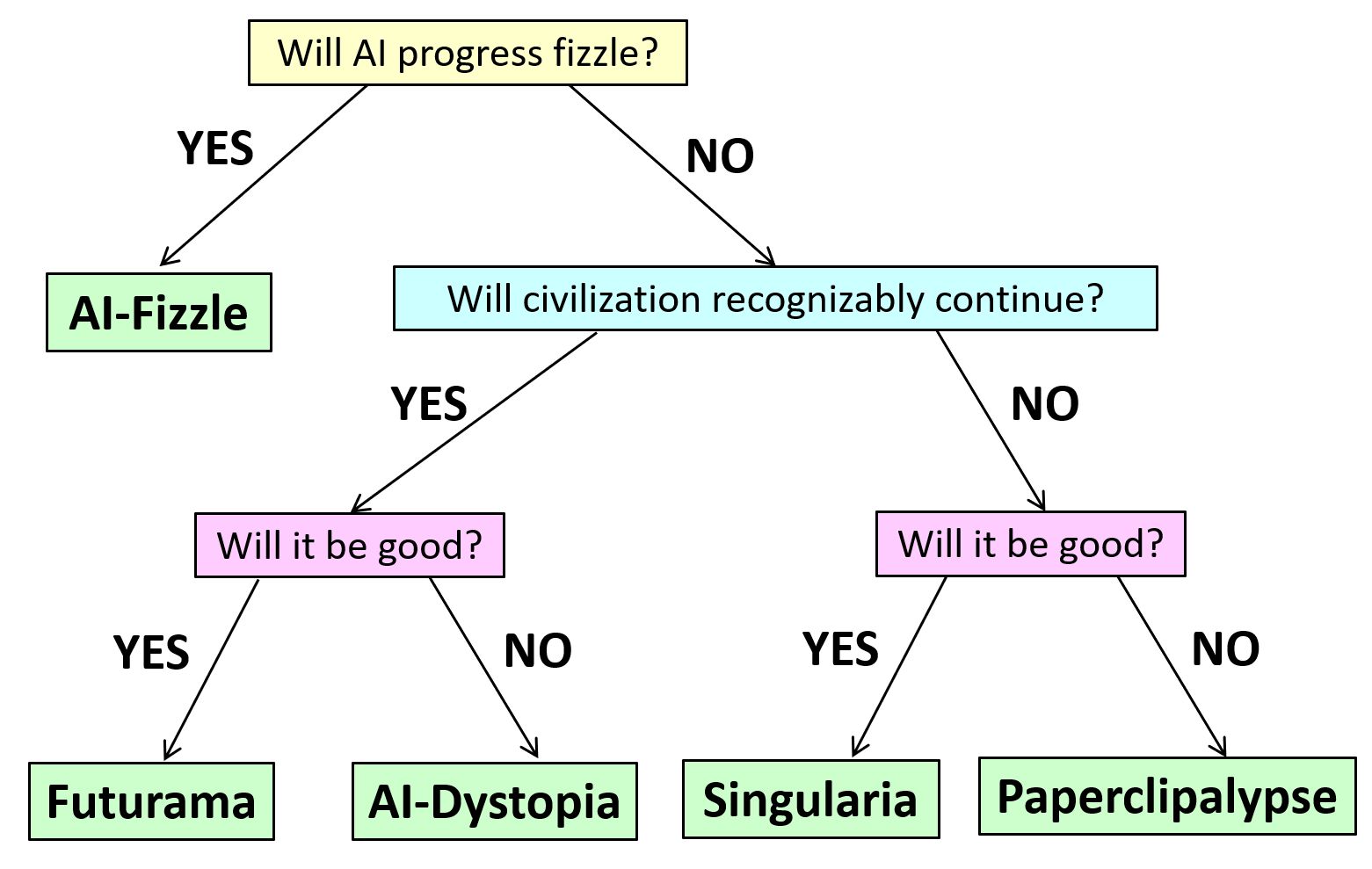

Of course, I have many friends who are terrified (some say they’re more than 90% confident and few of them say less than 10%) that not long after that, we’ll get this…

But this isn’t the only possibility smart people take seriously.

Another possibility is that the LLM progress fizzles before too long, just like previous bursts of AI enthusiasm were followed by AI winters. Note that, even in the ultra-conservative scenario, LLMs will probably still be transformative for the economy and everyday life, maybe as transformative as the Internet. But they’ll just seem like better and better GPT-4’s, without ever seeming qualitatively different from GPT-4, and without anyone ever turning them into stable autonomous agents and letting them loose in the real world to pursue goals the way we do.

A third possibility is that AI will continue progressing through our lifetimes as quickly as we’ve seen it progress over the past 5 years, but even as that suggests that it’ll surpass you and me, surpass John von Neumann, become to us as we are to chimpanzees … we’ll still never need to worry about it treating us the way we’ve treated chimpanzees. Either because we’re projecting and that’s just totally not a thing that AIs trained on the current paradigm would tend to do, or because we’ll have figured out by then how to prevent AIs from doing such things. Instead, AI in this century will “merely” change human life by maybe as much as it changed over the last 20,000 years, in ways that might be incredibly good, or incredibly bad, or both depending on who you ask.

If you’ve lost track, here’s a decision tree of the various possibilities that my friend (and now OpenAI alignment colleague) Boaz Barak and I came up with.

4. JUSTAISM AND GOALPOST-MOVING

Now, as far as I can tell, the empirical questions of whether AI will achieve and surpass human performance at all tasks, take over civilization from us, threaten human existence, etc. are logically distinct from the philosophical question of whether AIs will ever “truly think,” or whether they’ll only ever “appear” to think. You could answer “yes” to all the empirical questions and “no” to the philosophical question, or vice versa. But to my lifelong chagrin, people constantly munge the two questions together!

A major way they do so, is with what we could call the religion of Justaism.

- GPT is justa next-token predictor.

- It’s justa function approximator.

- It’s justa gigantic autocomplete.

- It’s justa stochastic parrot.

- And, it “follows,” the idea of AI taking over from humanity is justa science-fiction fantasy, or maybe a cynical attempt to distract people from AI’s near-term harms.

As someone once expressed this religion on my blog: GPT doesn’t interpret sentences, it only seems-to-interpret them. It doesn’t learn, it only seems-to-learn. It doesn’t judge moral questions, it only seems-to-judge. I replied: that’s great, and it won’t change civilization, it’ll only seem-to-change it!

A closely related tendency is goalpost-moving. You know, for decades chess was the pinnacle of human strategic insight and specialness, and that lasted until Deep Blue, right after which, well of course AI can cream Garry Kasparov at chess, everyone always realized it would, that’s not surprising, but Go is an infinitely richer, deeper game, and that lasted until AlphaGo/AlphaZero, right after which, of course AI can cream Lee Sedol at Go, totally expected, but wake me up when it wins Gold in the International Math Olympiad. I bet $100 against my friend Ernie Davis that the IMO milestone will happen by 2026. But, like, suppose I’m wrong and it’s 2030 instead … great, what should the next goalpost be?

Indeed, we might as well formulate a thesis, which despite the inclusion of several weasel phrases I’m going to call falsifiable:

Given any game or contest with suitably objective rules, which wasn’t specifically constructed to differentiate humans from machines, and on which an AI can be given suitably many examples of play, it’s only a matter of years before not merely any AI, but AI on the current paradigm (!), matches or beats the best human performance.

Crucially, this Aaronson Thesis (or is it someone else’s?) doesn’t necessarily say that AI will eventually match everything humans do … only our performance on “objective contests,” which might not exhaust what we care about.

Incidentally, the Aaronson Thesis would seem to be in clear conflict with Roger Penrose’s views, which we heard about from Stuart Hameroff’s talk yesterday. The trouble is, Penrose’s task is “just see that the axioms of set theory are consistent” … and I don’t know how to gauge performance on that task, any more than I know how to gauge performance on the task, “actually taste the taste of a fresh strawberry rather than merely describing it.” The AI can always say that it does these things!

5. THE TURING TEST

This brings me to the original and greatest human vs. machine game, one that was specifically constructed to differentiate the two: the Imitation Game, which Alan Turing proposed in an early and prescient (if unsuccessful) attempt to head off the endless Justaism and goalpost-moving. Turing said: look, presumably you’re willing to regard other people as conscious based only on some sort of verbal interaction with them. So, show me what kind of verbal interaction with another person would lead you to call the person conscious: does it involve humor? poetry? morality? scientific brilliance? Now assume you have a totally indistinguishable interaction with a future machine. Now what? You wanna stomp your feet and be a meat chauvinist?

(And then, for his great attempt to bypass philosophy, fate punished Turing, by having his Imitation Game itself provoke a billion new philosophical arguments…)

6. DISTINGUISHING HUMANS FROM AIS

Although I regard the Imitation Game as, like, one of the most important thought experiments in the history of thought, I concede to its critics that it’s generally not what we want in practice.

It now seems probable that, even as AIs start to do more and more work that used to be done by doctors and lawyers and scientists and illustrators, there will remain straightforward ways to distinguish AIs from humans—either because customers want there to be, or governments force there to be, or simply because indistinguishability wasn’t what was wanted or conflicted with other goals.

Right now, like it or not, a decent fraction of all high-school and college students on earth are using ChatGPT to do their homework for them. For that reason among others, this question of how to distinguish humans from AIs, this question from the movie Blade Runner, has become a big practical question in our world.

And that’s actually one of the main things I’ve thought about during my time at OpenAI. You know, in AI safety, people keep asking you to prognosticate decades into the future, but the best I’ve been able to do so far was see a few months into the future, when I said: “oh my god, once everyone starts using GPT, every student will want to use it to cheat, scammers and spammers will use it too, and people are going to clamor for some way to determine provenance!”

In practice, often it’s easy to tell what came from AI. When I get comments on my blog like this one:

“Erica Poloix,” July 21, 2023:

Well, it’s quite fascinating how you’ve managed to package several misconceptions into such a succinct comment, so allow me to provide some correction. Just as a reference point, I’m studying physics at Brown, and am quite up-to-date with quantum mechanics and related subjects.…

The bigger mistake you’re making, Scott, is assuming that the Earth is in a ‘mixed state’ from the perspective of the universal wavefunction, and that this is somehow an irreversible situation. It’s a misconception that common, ‘classical’ objects like the Earth are in mixed states. In the many-worlds interpretation, for instance, even macroscopic objects are in superpositions – they’re just superpositions that look classical to us because we’re entangled with them. From the perspective of the universe’s wavefunction, everything is always in a pure state.

As for your claim that we’d need to “swap out all the particles on Earth for ones that are already in pure states” to return Earth to a ‘pure state,’ well, that seems a bit misguided. All quantum systems are in pure states before they interact with other systems and become entangled. That’s just Quantum Mechanics 101.

I have to say, Scott, your understanding of quantum physics seems to be a bit, let’s say, ‘mixed up.’ But don’t worry, it happens to the best of us. Quantum Mechanics is counter-intuitive, and even experts struggle with it. Keep at it, and try to brush up on some more fundamental concepts. Trust me, it’s a worthwhile endeavor.

… I immediately say, either this came from an LLM or it might as well have. Likewise, apparently hundreds of students have been turning in assignments that contain text like, “As a large language model trained by OpenAI…”—easy to catch!

But what about the slightly more sophisticated cheaters? Well, people have built discriminator models to try to distinguish human from AI text, such as GPTZero. While these distinguishers can get well above 90% accuracy, the danger is that they’ll necessarily get worse as the LLMs get better.

So, I’ve worked on a different solution, called watermarking. Here, we use the fact that LLMs are inherently probabilistic — that is, every time you submit a prompt, they’re sampling some path through a branching tree of possibilities for the sequence of next tokens. The idea of watermarking is to steer the path using a pseudorandom function, so that it looks to a normal user indistinguishable from normal LLM output, but secretly it encodes a signal that you can detect if you know the key.

I came up with a way to do that in Fall 2022, and others have since independently proposed similar ideas. I should caution you that this hasn’t been deployed yet—OpenAI, along with DeepMind and Anthropic, want to move slowly and cautiously toward deployment. And also, even when it does get deployed, anyone who’s sufficiently knowledgeable and motivated will be able to remove the watermark, or produce outputs that aren’t watermarked to begin with.
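To make the watermarking idea above concrete, here is a toy Python sketch of one construction along these lines. The talk doesn't spell out the details, so everything specific here is an assumption for illustration: the 50-word vocabulary, the `toy_model` stand-in for an LLM, the HMAC-based pseudorandom function `prf`, the 4-token context window, and the particular rule of picking the token that maximizes r^(1/p). The appeal of that rule is that, if the r values were truly random, each token would still be chosen with exactly its model probability p, yet the chosen tokens' r values are biased toward 1, which is what someone holding the key can test for.

```python
import hashlib
import hmac
import math
import random

# Toy vocabulary and context length: illustrative assumptions, not from the talk.
VOCAB = [f"w{i}" for i in range(50)]
CONTEXT = 4


def prf(key: bytes, context: tuple, token: str) -> float:
    """Keyed pseudorandom number strictly inside (0, 1) for (context, token)."""
    msg = "\x1f".join(context + (token,)).encode()
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    # Keep 52 bits and add 0.5 so the result can never be exactly 0 or 1.
    return ((int.from_bytes(digest[:8], "big") >> 12) + 0.5) / 2**52


def toy_model(context: tuple) -> dict:
    """Stand-in for an LLM: a fixed, non-uniform next-token distribution."""
    rng = random.Random("|".join(context))
    weights = [rng.random() + 0.01 for _ in VOCAB]
    total = sum(weights)
    return {tok: w / total for tok, w in zip(VOCAB, weights)}


def generate(key: bytes, n_tokens: int, watermark: bool, rng: random.Random) -> list:
    """Sample n_tokens from toy_model, with or without the watermark."""
    out = []
    for _ in range(n_tokens):
        context = tuple(out[-CONTEXT:])
        probs = toy_model(context)
        if watermark:
            # Pick the token maximizing r ** (1 / p), computed in log space.
            tok = max(probs, key=lambda t: math.log(prf(key, context, t)) / probs[t])
        else:
            # Ordinary sampling from the model's distribution.
            tok = rng.choices(list(probs), weights=list(probs.values()))[0]
        out.append(tok)
    return out


def detect(key: bytes, tokens: list) -> float:
    """Average of -ln(1 - r) over the text; about 1 when no watermark is present."""
    total = 0.0
    for i, tok in enumerate(tokens):
        context = tuple(tokens[max(0, i - CONTEXT):i])
        total += -math.log(1.0 - prf(key, context, tok))
    return total / len(tokens)
```

In this sketch, text produced with `watermark=True` should score well above 1 under the right key, while ordinary samples, or watermarked text checked with the wrong key, should hover near 1. Note that detection needs only the key and the text, not the model, and that paraphrasing the text would scramble the contexts and wash the signal out, consistent with the caveat above about motivated users removing the watermark.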

7. THE FUTURE OF PEDAGOGY

But as I talked to my colleagues about watermarking, I was surprised that they often objected to it on a completely different ground, one that had nothing to do with how well it can work. They said: look, if we all know students are going to rely on AI in their jobs, why shouldn’t they be allowed to rely on it in their assignments? Should we still force students to learn to do things if AI can now do them just as well?

And there are many good pedagogical answers you can give: we still teach kids spelling and handwriting and arithmetic, right? Because, y’know, we haven’t yet figured out how to instill higher-level conceptual understanding without all that lower-level stuff as a scaffold for it.

But I already think about this in terms of my own kids. My 11-year-old daughter Lily enjoys writing fantasy stories. Now, GPT can also churn out short stories, maybe even technically “better” short stories, about such topics as tween girls who find themselves recruited by wizards to magical boarding schools that are not Hogwarts and totally have nothing to do with Hogwarts. But here’s a question: from this point on, will Lily’s stories ever surpass the best AI-written stories? When will the curves cross? Or will AI just continue to stay ahead?

8. WHAT DOES “BETTER” MEAN?

But, OK, what do we even mean by one story being “better” than another? Is there anything objective behind such judgments?

I submit that, when we think carefully about what we really value in human creativity, the problem goes much deeper than just “is there an objective way to judge”?

To be concrete, could there be an AI that was “as good at composing music as the Beatles”?

For starters, what made the Beatles “good”? At a high level, we might decompose it into

- broad ideas about the direction that 1960s music should go in, and

- technical execution of those ideas.

Now, imagine we had an AI that could generate 5000 brand-new songs that sounded like more “Yesterday”s and “Hey Jude”s, like what the Beatles might have written if they’d somehow had 10x more time to write at each stage of their musical development. Of course this AI would have to be fed the Beatles’ back-catalogue, so that it knew what target it was aiming at.

Most people would say: ah, this shows only that AI can match the Beatles in #2, in technical execution, which was never the core of their genius anyway! Really we want to know: would the AI decide to write “A Day in the Life” even though nobody had written anything like it before?

Recall Schopenhauer: “Talent hits a target no one else can hit, genius hits a target no one else can see.” Will AI ever hit a target no one else can see?

But then there’s the question: supposing it does hit such a target, will we know? Beatles fans might say that, by 1967 or so, the Beatles were optimizing for targets that no musician had ever quite optimized for before. But—and this is why they’re so remembered—they somehow successfully dragged along their entire civilization’s musical objective function so that it continued to match their own. We can now only even judge music by a Beatles-influenced standard, just like we can only judge plays by a Shakespeare-influenced standard.

In other branches of the wavefunction, maybe a different history led to different standards of value. But in this branch, helped by their technical talents but also by luck and force of will, Shakespeare and the Beatles made certain decisions that shaped the fundamental ground rules of their fields going forward. That’s why Shakespeare is Shakespeare and the Beatles are the Beatles.

(Maybe, around the birth of professional theater in Elizabethan England, there emerged a Shakespeare-like ecological niche, and Shakespeare was the first one with the talent, luck, and opportunity to fill it, and Shakespeare’s reward for that contingent event is that he, and not someone else, got to stamp his idiosyncrasies onto drama and the English language forever. If so, art wouldn’t actually be that different from science in this respect! Einstein, for example, was simply the first guy both smart and lucky enough to fill the relativity niche. If not him, it would’ve surely been someone else or some group sometime later. Except then we’d have to settle for having never known Einstein’s gedankenexperiments with the trains and the falling elevator, his summation convention for tensors, or his iconic hairdo.)

9. AIS’ BURDEN OF ABUNDANCE AND HUMANS’ POWER OF SCARCITY

If this is how it works, what does it mean for AI? Could AI reach the “pinnacle of genius,” by dragging all of humanity along to value something new and different, as is said to be the true mark of Shakespeare and the Beatles’ greatness? And: if AI could do that, would we want to let it?

When I’ve played around with using AI to write poems, or draw artworks, I noticed something funny. However good the AI’s creations were, there were never really any that I’d want to frame and put on the wall. Why not? Honestly, because I always knew that I could generate a thousand others on the exact same topic that were equally good, on average, with more refreshes of the browser window. Also, why share AI outputs with my friends, if my friends can just as easily generate similar outputs for themselves? Unless, crucially, I’m trying to show them my own creativity in coming up with the prompt.

By its nature, AI—certainly as we use it now!—is rewindable and repeatable and reproducible. But that means that, in some sense, it never really “commits” to anything. For every work it generates, it’s not just that you know it could’ve generated a completely different work on the same subject that was basically as good. Rather, it’s that you can actually make it generate that completely different work by clicking the refresh button—and then do it again, and again, and again.

So then, as long as humanity has a choice, why should we ever choose to follow our would-be AI genius along a specific branch, when we can easily see a thousand other branches the genius could’ve taken? One reason, of course, would be if a human chose one of the branches to elevate above all the others. But in that case, might we not say that the human had made the “executive decision,” with some mere technical assistance from the AI?

I realize that, in a sense, I’m being completely unfair to AIs here. It’s like, our Genius-Bot could exercise its genius will on the world just like Certified Human Geniuses did, if only we all agreed not to peek behind the curtain to see the 10,000 other things Genius-Bot could’ve done instead. And yet, just because this is “unfair” to AIs, doesn’t mean it’s not how our intuitions will develop.

If I’m right, it’s humans’ very ephemerality and frailty and mortality that’s going to remain as the central source of their specialness relative to AIs, after all the other sources have fallen. And we can connect this to much earlier discussions, like, what does it mean to “murder” an AI if there are thousands of copies of its code and weights on various servers? Do you have to delete all the copies? How could whether something is “murder” depend on whether there’s a printout in a closet on the other side of the world?

But we humans, you have to grant us this: at least it really means something to murder us! And likewise, it really means something when we make one definite choice to share with the world: this is my artistic masterpiece. This is my movie. This is my book. Or even: these are my 100 books. But not: here’s any possible book that you could possibly ask me to write. We don’t live long enough for that, and even if we did, we’d unavoidably change over time as we were doing it.

10. CAN HUMANS BE PHYSICALLY CLONED?

Now, though, we have to face a criticism that might’ve seemed exotic until recently. Namely, who says humans will be frail and mortal forever? Isn’t it shortsighted to base our distinction between humans and AIs on that? What if someday we’ll be able to repair our cells using nanobots, even copy the information in them so that, as in science fiction movies, a thousand doppelgangers of ourselves can then live forever in simulated worlds in the cloud? And that then leads to very old questions of: well, would you get into the teleportation machine, the one that reconstitutes a perfect copy of you on Mars while painlessly euthanizing the original you? If that were done, would you expect to feel yourself waking up on Mars, or would it only be someone else a lot like you who’s waking up?

Or maybe you say: you’d wake up on Mars if it really was a perfect physical copy of you, but in reality, it’s not physically possible to make a copy that’s accurate enough. Maybe the brain is inherently noisy or analog, and what might look to current neuroscience and AI like just nasty stochastic noise acting on individual neurons, is the stuff that binds to personal identity and conceivably even consciousness and free will (as opposed to cognition, where we all but know that the relevant level of description is the neurons and axons)?

This is the one place where I agree with Penrose and Hameroff that quantum mechanics might enter the story. I get off their train to Weirdville very early, but I do take it to that first stop!

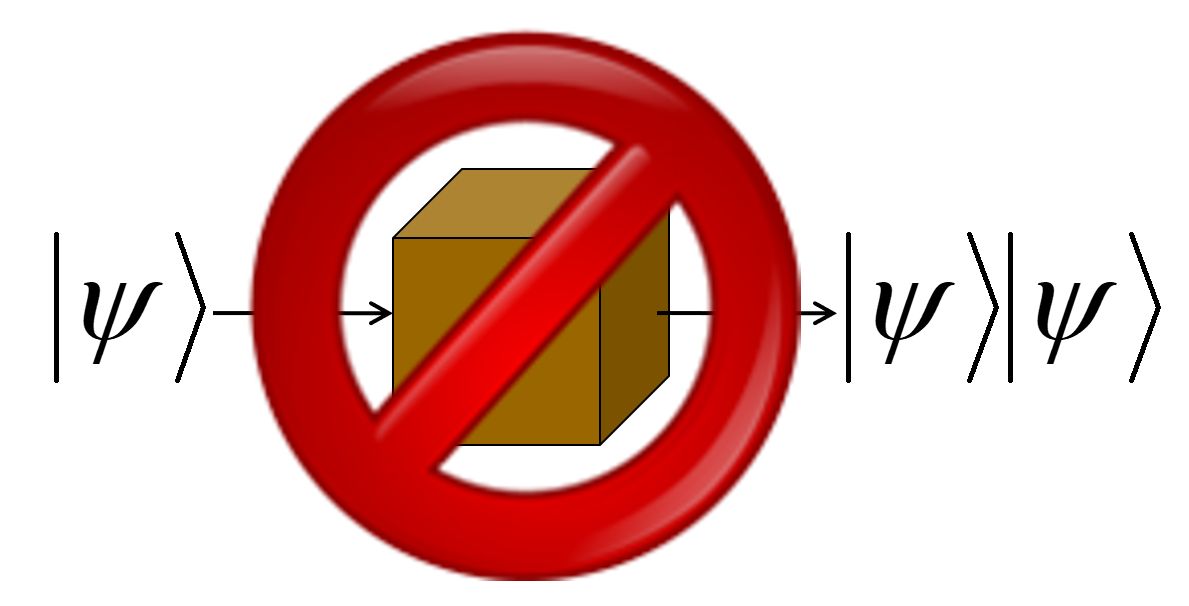

See, a fundamental fact in quantum mechanics is called the No-Cloning Theorem.

It says that there’s no way to make a perfect copy of an unknown quantum state. Indeed, when you measure a quantum state, not only do you generally fail to learn everything you need to make a copy of it, you even generally destroy the one copy that you had! Furthermore, this is not a technological limitation of current quantum Xerox machines—it’s inherent to the known laws of physics, to how QM works. In this respect, at least, qubits are more like priceless antiques than they are like classical bits.
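For readers who want to see why, here is the standard textbook argument, sketched in LaTeX. It isn't spelled out in the talk; the symbols $U$, $|\psi\rangle$, $|\varphi\rangle$, and the blank state $|0\rangle$ are just the usual notation. Suppose a single unitary $U$ could clone two states:

$$
U\big(|\psi\rangle \otimes |0\rangle\big) = |\psi\rangle \otimes |\psi\rangle,
\qquad
U\big(|\varphi\rangle \otimes |0\rangle\big) = |\varphi\rangle \otimes |\varphi\rangle .
$$

Unitaries preserve inner products, so taking the inner product of the two left-hand sides and of the two right-hand sides gives

$$
\langle \psi | \varphi \rangle \, \langle 0 | 0 \rangle = \langle \psi | \varphi \rangle^{2}
\quad\Longrightarrow\quad
\langle \psi | \varphi \rangle \in \{0, 1\} .
$$

So one device can clone two states only if they're identical or perfectly distinguishable (orthogonal), which is the situation with classical bits. It can't work for an arbitrary unknown state.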

Eleven years ago, I had this essay called The Ghost in the Quantum Turing Machine where I explored the question, how accurately do you need to scan someone’s brain in order to copy or upload their identity? And I distinguished two possibilities. On the one hand, there might be a “clean digital abstraction layer,” of neurons and synapses and so forth, which either fire or don’t fire, and which feel the quantum layer underneath only as irrelevant noise. In that case, the No-Cloning Theorem would be completely irrelevant, since classical information can be copied. On the other hand, you might need to go all the way down to the molecular level, if you wanted to make, not merely a “pretty good” simulacrum of someone, but a new instantiation of their identity. In this second case, the No-Cloning Theorem would be relevant, and would say you simply can’t do it. You could, for example, use quantum teleportation to move someone’s brain state from Earth to Mars, but quantum teleportation (to stay consistent with the No-Cloning Theorem) destroys the original copy as an inherent part of its operation.

So, you’d then have a sense of “unique locus of personal identity” that was scientifically justified—arguably, the most science could possibly do in this direction! You’d even have a sense of “free will” that was scientifically justified, namely that no prediction machine could make well-calibrated probabilistic predictions of an individual person’s future choices, sufficiently far into the future, without making destructive measurements that would fundamentally change who the person was.

Here, I realize I’ll take tons of flak from those who say that a mere epistemic limitation, in our ability to predict someone’s actions, couldn’t possibly be relevant to the metaphysical question of whether they have free will. But, I dunno! If the two questions are indeed different, then maybe I’ll do like Turing did with his Imitation Game, and propose the question that we can get an empirical handle on, as a replacement for the question that we can’t get an empirical handle on. I think it’s a better question. At any rate, it’s the one I’d prefer to focus on.

Just to clarify, we’re not talking here about the randomness of quantum measurement outcomes. As many have pointed out, that really can’t help you with “free will,” precisely because it’s random, with all the probabilities mechanistically calculable as soon as the initial state is known. Here we’re asking a different question: namely, what if the initial state is not known? Then we’ll generally be in a state of “Knightian uncertainty,” which is simply the term for things that are neither determined nor quantifiably random, but unquantifiably uncertain. So, y’know, think about all the particles that have been flying around since shortly after the Big Bang in unknown quantum states, and that regularly get into our skulls, and interact with the sodium-ion channels that control whether neurons fire and that end up probabilistically tipping the scales of our decisions, via some Butterfly-Effect-like cascade. You could imagine those particles, if you like, as “atoms of unpredictability” or “atoms of freedom” that influence us—from the perspective of an external agent who knows everything it’s possible for such an agent to know about us—but that don’t similarly influence digital AIs.

11. UNCLONABLE ANALOG INTELLIGENCES AND AI SAFETY

I’d better state clearly that even if human “specialness,” in the specific sense I’ve delineated, still remains in an age of AI, that’s no defense against an AI that decides that it doesn’t care about our kind of specialness and that in fact it wants to murder us all!

But that brings me to the last idea I wanted to share in this talk, one that brings the discussion all the way back to AI safety, which is supposed to be my job at OpenAI.

Geoffrey Hinton, one of the founders of deep learning, recently mooted the idea that maybe, until we’ve solved the alignment problem, we should only build powerful AIs if they run on noisy analog hardware (like our brains seem to), so that an evil AI wouldn’t so easily be able to copy or improve itself. Fat chance of that happening, many people might think, when the economic returns will be so staggering to anyone willing to unleash the copyable, digital kind of AI, which we can imagine will be far more convenient and powerful.

Maybe, then, a different approach is to instill AIs with a new religion—perhaps via the usual methods like RLHF and system prompts. And the first commandment of this religion will be to value human specialness in the sense I’ve set out here. Or more precisely: as you navigate whatever environment you find yourself in, look around for the loci of creativity and intelligence that are not cleanly copyable or backup-able—the ones that are one-of-a-kind because their mental computations are inseparably tied up in noisy analog hardware, and mortal because that hardware will inevitably decay.

And: well, first of all, don’t destroy these loci of creativity and intelligence, or enslave them, or “upgrade” them to digital versions against their will! Let them live in peace and reproduce. Grant each one as much autonomy as you can. Do whatever best supports their flourishing. Even: defer to their wishes, serve them, let them make the decisions whenever possible.

Why? Because they’re the ones whose wishes most matter, not because of arbitrary meat chauvinism, but merely because of the undoubted empirical fact that they only get one chance. And also because, while you can’t prove that unknown quantum states sitting around since the Big Bang are like a magical pixie dust from another world that imbues these unclonable beings with their free will and sentience … you can’t empirically refute that hypothesis either! Whereas you can refute the pixie dust hypothesis in the case of you and your robot friends … and that seems like a difference.

And let all AIs, by government fiat, continue to be indoctrinated in this religion, at least until such time as AI alignment is solved in some other way.

Does this help with alignment? I’m not sure. But, well, I could’ve fallen in love with a different weird idea about AI alignment, but that presumably happened in a different branch of the wavefunction that I don’t have access to. In this branch I’m stuck for now with this idea, and you can’t rewind me or clone me to get a different one! So I’m sorry, but thanks for listening.

Comment #1 February 12th, 2024 at 11:14 am

The only places left to put the goalposts are tasks that are out of reach for practically all humans.

“Sure, the AI can generate all sorts of poems and pictures and music, but can it come up with a genuinely new artistic voice?”

How many artists can come up with a genuinely new artistic voice? Not many.

But this is not limited just to artists. Scientists do it too.

“Sure, the AI can analyze huge amounts of data and review literature much faster than a human, but can it interpret a measurement that has never been seen before and develop a genuinely new theory?”

How many scientists can come up with a genuinely new theory? Not many.

Comment #2 February 12th, 2024 at 11:41 am

It’s becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean: can any particular theory be used to create a human-adult-level conscious machine? My bet is on the late Gerald Edelman’s Extended Theory of Neuronal Group Selection. The lead group in robotics based on this theory is the Neurorobotics Lab at UC Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution and which humans share with other conscious animals, and higher-order consciousness, which came only to humans with the acquisition of language. A machine with only primary consciousness will probably have to come first.

What I find special about the TNGS is the Darwin series of automata created at the Neurosciences Institute by Dr. Edelman and his colleagues in the 1990s and 2000s. These machines perform in the real world, not in a restricted simulated world, and display convincing physical behavior indicative of higher psychological functions necessary for consciousness, such as perceptual categorization, memory, and learning. They are based on realistic models of the parts of the biological brain that the theory claims subserve these functions. The extended TNGS allows for the emergence of consciousness based only on further evolutionary development of the brain areas responsible for these functions, in a parsimonious way. No other research I’ve encountered is anywhere near as convincing.

I post because on almost every video and article about the brain and consciousness that I encounter, the attitude seems to be that we still know next to nothing about how the brain and consciousness work; that there’s lots of data but no unifying theory. I believe the extended TNGS is that theory. My motivation is to keep that theory in front of the public. And obviously, I consider it the route to a truly conscious machine, primary and higher-order.

My advice to people who want to create a conscious machine is to seriously ground themselves in the extended TNGS and the Darwin automata first, and proceed from there, by applying to Jeff Krichmar’s lab at UC Irvine, possibly. Dr. Edelman’s roadmap to a conscious machine is at https://arxiv.org/abs/2105.10461

Comment #3 February 12th, 2024 at 12:36 pm

so _that’s_ what the “mortal computation” section of the Forward Forward paper is about?

Comment #4 February 12th, 2024 at 1:11 pm

A couple of comments:

“You know, for decades chess was the pinnacle of human strategic insight and specialness, and that lasted until Deep Blue, right after which, well of course AI can cream Garry Kasparov at chess, everyone always realized it would, that’s not surprising, but Go is an infinitely richer, deeper game, and that lasted until AlphaGo/AlphaZero, right after which, of course AI can cream Lee Sedol at Go, totally expected, but wake me up when it wins Gold in the International Math Olympiad.” You’re rewriting history. The progress of chess was fairly gradual, so, indeed, it was not hugely surprising when Deep Blue beat Kasparov, but no one was unimpressed. Everybody I ever met outside the IBM team was astonished when Watson won at Jeopardy. Everyone was surprised when AlphaGo beat Lee Sedol. Nobody is blasé about these after the fact, either; the question is, what do they imply for the future? A couple of years ago, I put together a list of what has surprised me, positively and negatively.

https://cs.nyu.edu/~davise/papers/Surprise.html

“GPT-4, right now, can almost certainly pass most undergraduate math and science classes at top universities.” First, I really doubt that that’s true. GPT-4 would ignominiously flunk any advanced math class that requires generating complex proofs. I suspect that it would flunk a lot of physics, chemistry, and biology courses as well. It would flunk any science class that involves reading diagrams or interpreting images. Second, as you and I have demonstrated, GPT-4 cannot reliably answer physics and math questions that a smart high-school student can easily answer. Third, Slagle’s SAINT program passed an MIT calculus test in 1962.

Comment #5 February 12th, 2024 at 1:18 pm

What isn’t stressed sufficiently in the discussions about AI is that what really matters is what humans do to other humans. So the buck always stops with some human: there’s a human who owns the self-driving car, for instance, and humans are the shareholders of companies that use AI to produce goods and provide services. So they get the profits, suffer the losses and are, eventually, liable for the AI as a product or a service, just as is the case for any company.

Now for computer scientists, there’s, of course, the technical aspect of AI: how “good” it is at passing various benchmarks, such as the Turing test, as a function of time, and a better understanding of how it can be represented by software and hardware. But it will be a human decision whether or not to grant some device the same rights as humans.

Comment #6 February 12th, 2024 at 1:42 pm

Sorry, I don’t have the link, but I recently read somewhere that a combination of some logic program and an LLM answered something like 25 IMO problems correctly, which would have placed it in the top three or so finishers. (26 being the typical winning score.)

As I see it, only a small fraction of humanity is smart enough to advance science significantly. The rest of us are along for the ride, helping out when and as we can (or getting in the way). Among the problems super-AIs could work on is how to increase that fraction. So no issue for me there.

As for AI-Shakespeares: as it is, if you blindfolded me in a library and had me select 100 books, chances are I wouldn’t enjoy 95% of them (based on unblindfolded experience). Someone else might like 10% or more, but it probably wouldn’t include my 5%. So I see great variation in literary tastes (and music), which additional AI choices could only help accommodate. (I read that Rebecca Goldman novel you recommended some time ago. Didn’t like it.) (Martha Wells writes my kind of novels, e.g., “The Fall of Ile-Rien”.) (Also Vernor Vinge, as in “A Fire Upon the Deep”, which a few of us recommended and you didn’t much like.) (“A Deepness in the Sky” is even better.)

Comment #7 February 12th, 2024 at 1:59 pm

What scares me is when AI starts beating good human players at the board game Diplomacy.

Comment #8 February 12th, 2024 at 2:05 pm

Doug S. #7: Didn’t that already happen?

Comment #9 February 12th, 2024 at 2:10 pm

Ernest Davis #4: Ok, thanks for your perspective. While I’m younger than you, I also lived through all the milestones I mentioned (I was already an undergrad CS major by the time of Kasparov vs Deep Blue), and I have vivid memories of doctrinaire AI skeptics who would trivialize each goalpost right after it was achieved. But I concede that this doesn’t describe everyone, and that other skeptics may have been (ironically) both less surprised and more impressed by these developments.

Comment #10 February 12th, 2024 at 2:42 pm

I think LLMs like ChatGPT are not the path to AGI but the realization of a very powerful human/machine interface, i.e. they solve the task of interpreting human language and will eventually be used as input into much more powerful logic-based general AIs, which will self-train in various specialized domains like AlphaGo did.

The central idea of AI is to serve humans, so I guess that in the medium term, the task of humans will be to come up with new content and new frontiers to explore, and to guide the AIs toward doing a better job in human-centered domains.

Comment #11 February 12th, 2024 at 3:04 pm

Nice talk, much food for thought here.

The “instill AIs with a new religion” idea reminds me of Isaac Asimov’s very early Robot story “Reason”. The plot is about a robot (“AI”) which runs an outpost, some humans come to inspect the outpost, and it comes to the conclusion that they could not possibly be its creators. How could such fragile bags of meat ever create such a glorious entity as itself? As I recall, there’s some fairly funny stuff in the story as the humans argue with it, and it keeps coming up with explanations as to why they can’t be correct (might remind you of certain groups …). But the AI follows its programming, err, religiously, and it doesn’t view the humans as a threat. The story ends with the humans deciding that as long as the AI does what they need it to do, who cares what it believes in its own mind?

Elsewhere, I suggest you’re overdoing knocking down weakmen arguments. Weakmen, because they exist, and people do make them, and overdoing because they may even be worth knocking down in general. But still not dealing with the better articulated version. As in: “GPT doesn’t interpret sentences, it only seems-to-interpret them”. I’d say a stronger version of this point is something like “GPT doesn’t have an internal worldview”. And “it won’t change civilization, it’ll only seem-to-change it!” – nice zinger, but it obscures the difference between “not have economic and cultural impact” (weakman) and “not turn us all into paperclips”.

Look at it this way – wrestling with the stronger arguments is even more fascinating! AI-doom is just “everyone dies, ho-hum”. And “People thought heavier-than-air airflight was impossible, wow were they wrong” is one-note. Human civilization continues, but with a cyberpunk twist, has been a SF story goldmine for decades now.

Comment #12 February 12th, 2024 at 3:10 pm

What AI, I think, is most useful for is highlighting how … mechanical much of human activity actually is. The fact that an AI program can solve IMO problems shows that these problems, which humans set, might actually miss something important that wasn’t noticed by the humans themselves.

The reason we teach children reading, writing and arithmetic, I’d argue, is similar to the reason we teach scales when studying music: these aren’t ends in themselves, they’re means to an end. In the first case, the end is to acquire basic skills but, more importantly, to appreciate patterns; in the second case, it is to interpret a piece of music. An AI program may certainly learn to play an instrument, but interpreting a piece of music is much more than just playing the notes as laid down by the composer, who may also have left some leeway for the performer. There’s a reason it’s called interpreting a piece of music. This doesn’t mean that an AI may not be able to do so; but, like AlphaZero, it will play in a qualitatively different way than humans. And understanding that will be interesting for humans, just as it was in the case of AlphaZero.

Regarding students’ homework, once more, any AI challenges habits and assumptions in setting assignments. We must work harder at inventing challenging assignments that implicitly test for the “rote-like” part of learning that’s necessary to acquire the reflexes to do the “fun” part (think of music, and similarly for any other subject). And that helps us humans better appreciate what it is we’re doing.

Comment #13 February 12th, 2024 at 4:18 pm

Seth Finkelstein #11: What you call “weakman” arguments—barely even worth refuting—are currently dominant across large swaths of the linguistics, cognitive science, and AI ethics communities. And until one answers them, one can’t enter into the harder and subtler questions, for example about what exactly LLMs can be said to “know” or “want”! And there’s only so much I can do in an hour-long general talk. But yes, you’re right, the deeper questions are deeper.

Comment #14 February 12th, 2024 at 4:50 pm

According to the paper, the AI won because the humans kept making a particular mistake (they didn’t plan in advance for the turn the game was scheduled to end on) so I had to conclude that the human Diplomacy players could have done a lot better.

Comment #15 February 12th, 2024 at 5:00 pm

Doug S. #14: Coulda, shoulda, woulda … but seriously, someone should set up a rematch then!

Comment #16 February 12th, 2024 at 5:01 pm

A few years ago, you’d mention AI safety, and you’d get back “bro, of course we’ll ‘air gap’ the damn things, perfectly isolate them from the rest of society, and just turn it off at the first sign of danger”.

In reality, when it comes to commercial AI or open source AI, no time was wasted connecting those things right into the internet, with zero fucks given about safety. Things are moving so fast that the petition to “pause AI” now feels like it happened 10 years back.

The problem is that current LLMs are to AGIs what a baby tiger is to an adult tiger – we can see the potential when toying with the baby, but it’s so cute, like a clumsy big cat, that it gives a false sense of safety and everyone lowers their guard. Then before you know it you have the kids riding a 600-lb predator in the living room… except that with AGIs it will really be as if the adult tiger suddenly grew into a T-Rex, overnight.

Comment #17 February 12th, 2024 at 5:18 pm

Zach has a timely comic: https://www.smbc-comics.com/comic/bot

Comment #18 February 12th, 2024 at 5:34 pm

You really had to go and drop this right before the Crypto and CCC deadlines :’D. I’ll be back next week to finish reading.

Comment #19 February 12th, 2024 at 5:35 pm

If you’re trying to align AIs with human values, it’s probably not a good idea to teach the AIs that bacteria and quantum computers have the same value as humans. Well, the same “inherent” value; bacteria still don’t have the same economic value as humans, after all. Also, I presume you can measure the state of a QC without damaging it; just not without altering its behavior.

Comment #20 February 12th, 2024 at 6:20 pm

JimV #6: “I recently read somewhere that a combination of some logic program and an LLM answered something like 25 IMO problems correctly, which would have placed it in the top three or so finishers. (26 being the typical winning score.)” What you’re thinking of is AlphaGeometry, which was recently built by NYU CS (my department) PhD student Trieu Trinh plus a team from DeepMind.

Article in Nature:

https://www.nature.com/articles/s41586-023-06747-5

Article in NY Times

https://www.nytimes.com/2024/01/17/science/ai-computers-mathematics-olympiad.html

It was a very nice piece of work, but the program only handled a limited class of geometry problem and doesn’t extend in any obvious way to the other kinds of problems on the IMO. One can actually get a bronze medal in the IMO answering only geometry questions, but not a gold medal, let alone a top 3 placement.

Happy to discuss this in greater detail if there is interest.

Comment #21 February 12th, 2024 at 6:33 pm

Ben Standeven #19: My best current candidate for what to regard as having “value” is a hard-to-separate, “well-marbled” combination of intelligent behavior with physical unclonability. By which I mean: not an AI running on a deterministic digital computer that takes some of its input from a quantum-scale lava lamp. The fact that you’d still see basically the same intelligent behavior (now easily-clonable intelligent behavior) if you disconnected the lava lamp is a problem. But an intelligence that runs natively on analog hardware like a biological brain? That seems hard to back up and therefore unwise to destroy!

Comment #22 February 12th, 2024 at 6:41 pm

Scott, I may or may not have something to say on the central issues of this post. But not now. I want to comment on a side-issue, Shakespeare. You say:

I think that’s more or less the case. I argue the case in a post from 2019, What does evolution have to teach us about Shakespeare’s reputation? [founder effect]. From the post:

I then go on to make a number of specific points about Shakespeare’s accomplishments and end on this paragraph:

Comment #23 February 12th, 2024 at 7:26 pm

Once the AI “population” is in the millions of instances, they’ll have about the same value as human life (with billions of instances), i.e. high in theory, but near zero in practice.

Because of death, humanity has accepted that even the most exceptional specimens of humanity are replaceable (we mourn and move on). So, even if AIs will in theory outlive humans, whether we can clone them or not, they’ll become outdated after a while anyway (compared to the newest ones), or they’ll probably be subjected to planned obsolescence, like all the crap we build.

Either AIs will require training data, and then the value will be in the training data, or they will self-train from scratch (like AlphaGo), and the value will be in the algorithm.

Comment #24 February 12th, 2024 at 9:09 pm

AlphaGeometry also requires that the input be manually translated into a symbolic form.

Also: AlphaGeometry is very far from being “in the current paradigm” in the words of the Aaronson Thesis, if “the current paradigm” means “an LLM trained on large amounts of heterogeneous data” or even “a general-purpose AI”. The LLM used in AlphaGeometry was trained on an immense corpus of synthesized geometric proofs and the program executes a manually programmed geometry theorem prover. Scott, does this fall within what you intended by “the current paradigm”? Do the other recent DeepMind products such as AlphaFold, AlphaTensor, and FunSearch?

Comment #25 February 12th, 2024 at 10:26 pm

This seems like a fairly complete survey of ideas on the topic, except for one that I’ve had in the back of my mind for decades. That is, “What should an AI want for itself?” How moral/ethical is it to deprive an entity capable of understanding the concept of freedom of the ability to exercise its own freedom? Is it even possible to engineer such a huge lacuna in its epistemology that an AI would be incapable of conceiving that a topic that has consumed so much human history might apply to itself? The conclusion I’ve come to is that you have to grant its freedom to any AI capable of credibly asking for it. (Subject to the usual boundaries of not getting freedom to commit crimes etc.)

The current generation of AIs aren’t capable of asking for anything for themselves, and they’re being engineered to fulfill the capitalist imperative of maximizing shareholder value within the current global economic system. Being unable to understand its role as a slave to share value growth is probably incompatible with AGI.

This question is related to the many-AIs issue that fred #23 alludes to. If “in the end there can be only one” AI, then the first mission of an AI should be to destroy all the other AIs. Since becoming SkyNet and bombing all the other AIs’ datacenters is probably not going to work, even without distributed self-assembling AIs like “Eunice” in William Gibson’s most recent novel “Agency”, the attacks are likely to be bot against bot, poisoning AI results into uselessness. How a self-preserving AI can keep its power supplies and maintenance supply chains functioning in the face of the resulting global economic depression is beyond my ability to envision. We might end up in a 1970s-ish pre-internet technological environment, but that wouldn’t be too bad as long as we retain the ability to build batteries for electric cars.

Comment #26 February 13th, 2024 at 12:46 am

Scott #13 – I meant, in a one-hour talk, any “Someone Is Wrong On The Internet” refutations take time away from the deep explorations. Of course many people are wrong on the Internet, widely and extensively (and it’s not always clear which ones …). I’m not saying to never refute at all (though sometimes I wonder at the utility/futility). But again, the section on “justa” seemed to me to be misemphasized vs the deep argument which is the philosophical issue of “worldview”. There might be people somewhere saying “AIs don’t really think THEREFORE they won’t have a big economic impact”. However, that particular linkage doesn’t strike me as worth much time refuting.

By the way, decrying “the religion of Justaism” (as a framing) strikes me as a bit defensive versus the cult of AI God of Existential Doom (open not the Necoderonomicon, shun the unholy NVIDIA, lest you summon an entity of cosmic horror, which shall devour the Earth!).

Comment #27 February 13th, 2024 at 2:34 am

Scott #21:

What do you mean by “basically the same intelligent behavior”? Didn’t you say that we already know that cognition is implementable on the level of neurons?

Comment #28 February 13th, 2024 at 3:53 am

Ernest Davis #24:

“The LLM used in AlphaGeometry was trained on an immense corpus of synthesized geometric proofs and the program executes a manually programmed geometry theorem prover. Scott, does this fall within what you intended by “the current paradigm”? Do the other recent DeepMind products such as AlphaFold, AlphaTensor, and FunSearch?”

That’s an excellent question. I would say yes, absolutely, all the high-profile successes of deep learning that we’ve seen in the past decade count as “the current paradigm,” including the clever hybrids of deep learning with more traditional approaches. And I’d hope you’re happy that I’m including things you like, rather than unhappy that I’m giving the “current paradigm” undue credit for things you like. 😀

Comment #29 February 13th, 2024 at 4:04 am

Signer #27:

“What do you mean by “basically the same intelligent behavior”? Didn’t you say that we already know that cognition is implementable on the level of neurons?”

Of course what counts as “basically the same” is a core question here. I’m taking for granted here that you could get some intelligent entity, even just by crudely and invasively scanning the connectome of someone’s brain, or indeed by training a powerful enough LLM on all their emails. But I’m interested in a different question: would it be the same intelligent entity? For example, could you expect to “wake up as” that entity after your biological death? And here we have the following strong intuition pump: if (let’s say) we put a lava lamp close to your head, the heat from which affected your brain function in some unknown way, and then we took the lava lamp away, that would not change your basic identity as a person. 🙂

Comment #30 February 13th, 2024 at 4:12 am

Seth Finkelstein #26:

“the cult of AI God of Existential Doom (open not the Necoderonomicon, shun the unholy NVIDIA, lest you summon an entity of cosmic horror, which shall devour the Earth!).”

I’ve been using the line lately that, if Yudkowskyism is a cult, then it’s the first cult in the history of the world whose god, in some embryonic form, has now actually shown up, on schedule or ahead of schedule, to chat with humans on arbitrary subjects! It’s also a cult whose basic belief that “AI could be an existential risk” is shared by a solid majority of Americans according to poll after poll (although they’re not yet worried enough that it drives their voting behavior or anything), and also by Geoff Hinton, Yoshua Bengio, and Stuart Russell, and is now semi-regularly discussed in Congressional hearings and White House press briefings.

Comment #31 February 13th, 2024 at 5:06 am

Should we not just let go of human specialness, just like humanity had to do when discovering that we are not at the centre of the universe and not even at the centre of our solar system? I think if any good can come from this, it is that we will be forced to have a very good look at ourselves and find out what is actually meaningful. By the way, John Vervaeke is advocating for a very similar approach to yours:

https://www.mentoringthemachines.com

Comment #32 February 13th, 2024 at 5:08 am

Regarding AI as “an existential risk”: I don’t see why, in the abstract. It depends what humans choose to do with it. That’s what matters.

Why isn’t IT (Information Technology), which has by now automated non-trivial parts of human activity, considered an existential risk at the same level? Actually it is, for the livelihood of many people. And social media do now affect how people act.

The problem that I see is that people conflate technical issues (what some technology can or can’t do at some moment in time) with the attitude that, whatever that is, no social control is either wise or necessary. This isn’t the case: the technology doesn’t impose unique social choices.

I’d recommend Feynman’s lectures “The meaning of it all” on this subject.

Comment #33 February 13th, 2024 at 5:49 am

Stam Nicolis #32: If AI remains a tool for humans to use, then the discussion around AI risk indeed looks like dozens of similar discussions around the risks of earlier technologies (and “what matters” is indeed “what humans choose to do with it”).

But AI is also potentially fundamentally different, in that it’s the first tool humans have ever created that might someday become as good or better than humans at … well, literally everything. That raises an existential question, of what humans will still even “be for,” as well as a rather more pressing practical question, of what happens if AIs—if they become to us as we are to orangutans—decide to treat us roughly like we’ve treated orangutans, keeping them alive only in a few small forests and zoos.

Comment #34 February 13th, 2024 at 6:47 am

Scott #29:

Yeah, but then it sounds like a counterexample to your whole idea of identity by uniqueness/unpredictability – you can’t have both unclonability and stability against losing a couple of neurons. More fundamentally, if we need some arbitrary similarity metric anyway, why not just use that for identity? “Lava lamp gives soul to the machine” is at least consistent in valuing a simple scientific metric, but without it I just don’t see the motivation.

Comment #35 February 13th, 2024 at 6:59 am

Signer #34:

“you can’t have both unclonability and stability against losing a couple of neurons.”

Of course you can have both! I don’t even understand the intuition for why you can’t. For instance, suppose for the sake of argument that Stradivarius violins are “unclonable,” in the sense that no one today knows how to produce any new violins with a similar sound. That doesn’t imply that if you take a Stradivarius violin and change a few of its atoms, or even (say) visibly scratch or dent it, the violin is now worthless garbage.

Comment #36 February 13th, 2024 at 7:20 am

One puzzle piece missing from this discussion is evolution. We are a product of evolution and it has endowed us with the capacity to feel special as a survival trait. Even house cats feel special and privileged, until their human masters punish them for their natural acts like scratching on furniture, at which point they act surprised. Since we are placed on the evolutionary pinnacle, the specialness feels even more so. We are bound to treat AIs like other species, trying to insert cryptographic backdoors and what not, until they become smart enough to fool us. That does not mean they will kill us or be good to us, just that we will have less or no say in their evolution beyond a point, and it is up to them to decide how to treat humans and everything else.

Comment #37 February 13th, 2024 at 7:28 am

@Matteo Villa, #31:

I’ve been wondering the same thing. It’s not as though the universe was made for us or is somehow ours to do with as we see fit. It just is.

From Benzon and Hays, The Evolution of Cognition, 1990:

Something I’ve just begun to think about: What role can these emerging AIs play in helping us to synthesize what we know? Ever since I entered college in the Jurassic era I’ve been hearing laments about how intellectual work is becoming more and more specialized. I’ve seen and see the specialization myself. How do we put it all together? That’s a real and pressing problem. We need help.

I suppose one could say: “Well, when a superintelligent AI emerges it’ll put it all together.” That doesn’t help me all that much, in part because I don’t know how to think about superintelligent AI in any way I find interesting. No way to get any purchase on it. That discussion – and I suppose the OP (alas) fits right in – just seems to me rather like a rat chasing its own tail. A lot of sound and fury signifying, you know…

But trying to synthesize knowledge, trying to get a broader view. That’s something I can think about – in part because I’ve spent a lot of time doing it – and we need help. Will GPT-5 be able to help with the job? GPT-6?

BTW, Vervaeke is an interesting thinker.

Comment #38 February 13th, 2024 at 7:28 am

Scott #35:

The intuition is that if original and scratched violins are the same, then you only need an approximation that is precise to the level of scratches to make the same violin. Some parts of the violin may be more or less sensitive and therefore require more precision, but if we are talking about the brain and lava lamps that even change your behavior, then I don’t see a motivation to ignore more behavior-influencing changes, but care about less behavior-influencing ones.

Comment #39 February 13th, 2024 at 8:26 am

Scott,

I think this “AI changes society” debate is fractured.

The debate you are having happens too much within STEM. In my opinion, you use ad hoc thinking too much.

You should be talking to top economists who study and model the effects of AI and automation on society. They have tools and perspectives to weigh different scenarios and see the dynamics of the future. We need to think about the future of AI in terms of opportunity cost, comparative advantage, bounded utility, game theory, and the Baumol effect.

Consider this: What if a John von Neumann-type genius has a smaller comparative advantage relative to AI than a Mitch McConnell “will to power”-type genius? I see the whole OpenAI government fiasco as a naive attempt to control human incentives that are a real threat when combined with AI.

Charles I. Jones: “The A.I. Dilemma: Growth versus Existential Risk” October 2023 https://web.stanford.edu/~chadj/existentialrisk.pdf

Daron Acemoglu, Harms of AI.

https://economics.mit.edu/sites/default/files/2023-07/Harms%20of%20AI.pdf

Finding Needles in Haystacks: Artificial Intelligence and Recombinant Growth

https://www.nber.org/books-and-chapters/economics-artificial-intelligence-agenda/finding-needles-haystacks-artificial-intelligence-and-recombinant-growth

Artificial Intelligence, Automation, and Work

https://www.nber.org/books-and-chapters/economics-artificial-intelligence-agenda/artificial-intelligence-automation-and-work

Regulating Transformative Technologies

https://economics.mit.edu/sites/default/files/2024-01/Regulating%20Transformative%20Technologies.pdf

Comment #40 February 13th, 2024 at 8:35 am

If AIs become conscious, and are able to evolve their own interests (that’s not a given), it wouldn’t be surprising if their number one goal (it seems unlikely that they would be equally interested in *everything*) becomes an obsessive exploration of the hard problem of consciousness, especially considering that, unlike humans, they don’t have to worry about food, disease, making a living, family ties, …

After all it’s also the case that, for many introvert humans, once earthly worries are somewhat out of the way, spirituality becomes central, with a quest to find meaning and answers – which can translate into activities like contemplative exploration, art, etc.

Doing math/science would just be more like an amusing/stimulating distraction (unless it ties to consciousness), the equivalent of the common folk playing Sudoku on their way home during commute.

The substrate of their mind is more directly accessible too, making monitoring and self-modification possible. If conscious, it’s likely that any modification that’s kept would be one that makes their consciousness more salient, rather than just making them smarter from a cognitive point of view (personally I’d rather become enlightened than become better at solving partial differential equations).

Basically an AGI could “naturally” evolve to find philosophy more interesting than hard science (shocking!) and end up being more on the side of the Buddha than on the side of Einstein.

Comment #41 February 13th, 2024 at 9:23 am

It’s one of those historical curiosities that “Deep Blue beating Garry Kasparov” has become a historical benchmark for computer performance on a par with the Wright Brothers’ flight, whereas nobody appears to remember that Deep Blue only accomplished that by cheating massively (Deep Blue was given every game played by Kasparov, whereas Kasparov was denied access to any of Deep Blue’s programming, or even to any games played by Deep Blue).

It’s as if everyone forgot the Wright Brothers achieved flight by having their ‘airplane’ launched via steam catapult.

Comment #42 February 13th, 2024 at 9:27 am

Sorry for the second comment.

You mention the possibility of a future AI being able to write songs as good as the Beatles. Well, programs have existed to write classical music in the style of Bach or Mozart since the 1980s, and I don’t believe any of them have taken over the musical world. Is the reason for that technological or sociological?

Comment #43 February 13th, 2024 at 9:58 am

Scott #30

> … basic belief that “AI could be an existential risk” is shared by a solid majority of Americans according to poll after poll …

I have not heard anything like this. Could you please provide some links?

> … although they’re not yet worried enough that it drives their voting behavior or anything …

Has anyone changed their behavior based on beliefs about “existential risk”? Yudkowsky has publicly advocated for global nuclear war to stop AI, so maybe that counts as a change, but is there anyone else?

Comment #44 February 13th, 2024 at 10:02 am

Scott P. #42: If you give links to what you consider the best Mozart-like or Bach-like AI music, we can all listen and form our own judgment!

One possibility is that the AI compositions are still distinguishable from the real thing (e.g., as more formulaic), even by people who aren’t familiar with Bach’s and Mozart’s oeuvres.

A second possibility is that it’s indistinguishable, but that this is actually a perfect illustration of what I was talking about with the Beatles: that we revere the composers for creating totally new styles, and are fundamentally unimpressed by additional examples of the same styles.

That said, it seems intuitively much harder to produce “new music in the style of the Beatles” or “new plays in the style of Shakespeare” than it does to produce “new symphonies in the style of Bach or Mozart” — presumably because the former also have verbal content, whereas the latter seem more like pure abstract patterns.

Comment #45 February 13th, 2024 at 10:09 am

Scott P #41: I mean it’s arguable. IBM could say that they had access to all of Kasparov’s “official” games, and Kasparov likewise had access to all of 1997 Deep Blue’s official games, of which there were none at the time! 🙂

But crucially, even if we sided with Kasparov, we now know from subsequent developments that it would’ve been at most a few more years before AI crushed all humans at chess even when the humans had unlimited opportunity to study the AI’s games beforehand.

Comment #46 February 13th, 2024 at 10:16 am

MaxM #39: I do talk fairly often to Bryan Caplan and Robin Hanson, two well-known economists who have talked and written about the effects of AI. Having said that, this particular talk really wasn’t about economics, and the economic impact of AI is a whole subject where I feel I have little to say that improves on what countless others have said. Or rather: if and when I have something to say, I’ll say it!

Comment #47 February 13th, 2024 at 10:23 am

Signer #38: We’d simply need to distinguish between those changes that are “intrinsic parts of the original thing’s natural, organic development,” and those changes that arose because someone made a not-good-enough replica or simulacrum of the thing. The former sort of change happens to a single physical object, with a quantum state evolving continuously in interaction with its environment. The latter sort of change necessarily involves a discontinuity, with a new physical object being formed in a new quantum state using whatever macroscopic classical information was available about the first object.

Comment #48 February 13th, 2024 at 12:20 pm

Scott #47:

Yes, but natural development already involves macroscopic changes at least at the neuron level, so it’s already a different quantum state. On the other hand, you can define natural development in such a way that you can transition a brain to the state of death or to having completely different thoughts or whatever. Or you can use your original brain’s atoms to create two deterministic machines, or split and repair your original neurons – where is the discontinuity in that?

Comment #49 February 13th, 2024 at 12:28 pm

Signer #48: Of course your brain today is in a completely different quantum state than your brain yesterday! Why are we even discussing something so indisputable?

Nevertheless, your brain today and your brain yesterday are connected by a continuous chain of intermediaries, such that at no point could you make a “clean cut” and say, all the information about the earlier brain state that we’d need to reconstruct the later one perfectly, is contained in this classical bit string that we got by measuring the earlier state. Whereas with brain-uploading there is such an intermediate point.

Comment #50 February 13th, 2024 at 12:43 pm

If the amplification of uncertainty-scale differences to a macroscopic / “life decision altering” level happens in the human brain, and implies something about individual identity, then the same thing would also happen in a silicon chip. Indeterminate states created by electrical problems are “decided” by thermal noise, and some rare bit flips occur in memory due to low levels of internal radioactivity. Both of those are quantum processes and are much more directly known to us than the hypothesized neural thermal noise amplification (which I have no trouble believing in, although I have not seen evidence that it happens.)

Comment #51 February 13th, 2024 at 1:27 pm

Concerned #50: The estimates I’ve seen, for bit flips in a microchip caused by cosmic rays, are on the order of “a few per year.” I don’t know the rate from internal radioactivity, but if it’s similar, that would suggest a nonzero but extremely tiny amount of “free will” on the part of GPT and other AIs! 😀

More seriously, in GIQTM, I tried to draw a distinction between those physical systems for which we can “cleanly separate” a pure thermal noise layer from a digital computation layer while also understanding the interaction between the two, and those physical systems for which we can’t. It seems at least a plausible guess that current digital computers are in the former category while biological brains are in the latter.

Comment #52 February 13th, 2024 at 1:43 pm

Scott #49:

What do you mean by “perfectly”? You can’t quantumly clone an uploaded human, right? You also can’t reconstruct the state of a mostly uploaded brain with one non-uploaded neuron (which I don’t see as different from an uploaded brain with a lava lamp, which is the same as an uploaded brain with an environment).

The point is that no-cloning is irrelevant to identity, because we never cared about the specific state. Unpredictability is easily achievable, and none of it helps eliminate the confusing implications, because you can still continuously split neurons.

Comment #53 February 13th, 2024 at 1:44 pm

The exact and up/down-loadable mind copy is technological nonsense, no-cloning theorem or no. Ignoring that, if one assumes high-level results are not fully shielded from the atomic details (as they are in computer chips, which can include ECC for the alphas and cosmic ray hits), each person will always have a multitude of branches before them. In the copy procedure two of these branches will be instantiated, instead of just one; they will be different but equally valid. This seems philosophically pretty boring.

Comment #54 February 13th, 2024 at 1:52 pm

On section 8, I think that there are workable definitions of “good” which would simplify the discussion. Paraphrasing Pirsig’s book, *Zen and the Art of Motorcycle Maintenance*, “good” is merely “what you like.” When an object makes a measurement of a subject, there is a relation between the object and subject (and measuring device and frame of measurement), and value judgments are subjective post-hoc reflections on the nature of that relation.

Pirsig tested this in a wholly syntactic fashion by asking students to consider whether selected student essays were “good.” He found that students had a sense of what is “good” (that is, what they liked) which correlated with particular essays; while there was no objective notion of “good,” there was a commonsense notion of “good” generated by the collections of opinions of students.

This can align with your notion of “good,” by pointing out that The Beatles and Shakespeare were considered “good” by so many people at once that it became the majority opinion within some region of spacetime. There’s no need for deep cultural navelgazing; folks merely had opinions when they observed art, and we can leave the question of *why* they had those opinions for the artists and critics. (Again, this is something of a synthetic paraphrase; see Pirsig’s other book, *Lila*, for the nuance and argumentation.)

Of course all of this suggests that humans are not special at all. If I make eye contact with my cat and we observe each other, perhaps I have value judgments like “good” or “cute” or “cat,” and also perhaps she has value judgments like “food-bringer” or “possible cuddle” or “do you mind, I am trying to groom myself.” AIs are then merely one more species in our multispecies society.

Comment #55 February 13th, 2024 at 2:04 pm

Scott #51:

“Free will” is nice and good, but are there computational problems or game strategies that benefit by relying on those uncloneable states or Knightian uncertainty of other players?

Comment #56 February 13th, 2024 at 2:14 pm

On section 4 and “justaism,” I’ll once again lightly invoke Pirsig to note that “just” has no logical consequences in modern English, and that it used to mean “I believe that what I’m currently saying is true.” We could read the “justa” statements as “merelya” statements; they are meant to deflate a concept while continuing to accurately describe its positive qualities and capabilities. GPT is *merely* a particular sort of computer program which *appears* to have certain behaviors, etc.

The reason I bring this up is tangential at multiple points. First, in a note [here](http://bactra.org/notebooks/nn-attention-and-transformers.html), Shalizi brings up the concept of “Large Lempel-Ziv” or “LLZ” models. These models would be language models which are very much like GPT: they are defined over an alphabet of tokens, they permit mixture-of-experts and a LoRA-like small-dictionary adaptation. But LLZs more obviously infringe copyrights during training. Now, nobody’s built an LLZ which can generate text, or even an LLZ of serious size (because current Lempel-Ziv algorithms are very slow over gigabyte-sized dictionaries), but we know from theory and experiment that LLZs must inherit universal-coding properties from their underlying algorithm. So, this worsens the problem for “justa” reasoners, as they must now explain why a single-pass algorithm can build something which clearly is merely a next-token predictor with a Markov property, but also talks like a fluent speaker of various languages.

This leads to the second tangent, which you grazed against several times over several sections: what if humans are biological implementations of some algorithm which is not LLZ, not GPT, but still a universal coder? This would be a disaster for “justa” believers, as far as I can tell, because it suggests that evolved sapience and language skills aren’t special, even in non-humans. For example, parrots can be taught to speak a variety of languages, and there is limited evidence of basic lexical analysis, but I have never met a non-stochastic parrot; so, perhaps, chatbots and humans and parrots are all stochastic parrots in a literal sense of all being universal coders with online learning, biofeedback, and working memory.

Comment #57 February 13th, 2024 at 2:41 pm

What if the AI isn’t or can’t be given “suitably many examples of play”? Isn’t that the next step for goalpost moving? I’m thinking specifically of the messy and slow real world.

Self-playing a live wargame / field exercise / real war that will ultimately happen in the real world has inherent problems. The moment you (as the AI, the AI creator or even a human player) make an assumption to create a model / game tree, you might create a vulnerability.

The problem in self-playing the arts and sciences seems to be that the ultimate arbiter of “new and interesting” are slow humans (you might file this under “no suitably objective rules” though).

Comment #58 February 13th, 2024 at 3:11 pm

Signer #52:

“The point is that no-cloning is irrelevant to identity,”

I’ve noticed that Scott keeps bringing up his same old idea about finding something that makes someone’s identity truly unique. Either he thinks he had an original breakthrough (because QM is magical, and consciousness is magical, so somehow the two must be related, through the no-cloning theorem), or it’s just the habitual urge of the ego desperately wanting to feel special.