Google’s Sycamore chip: no wormholes, no superfast classical simulation either

Update (Dec. 6): I’m having a blast at the Workshop on Spacetime and Quantum Information at the Institute for Advanced Study in Princeton. I’m learning a huge amount from the talks and discussions here—and also simply enjoying being back in Princeton, to see old friends and visit old haunts like the Bent Spoon. Tomorrow I’ll speak about my recent work with Jason Pollack on polynomial-time AdS bulk reconstruction. [New: click here for video of my talk!]

But there’s one thing, relevant to this post, that I can’t let pass without comment. Tonight, David Nirenberg, Director of the IAS and a medieval historian, gave an after-dinner speech to our workshop, centered around how auspicious it was that the workshop was being held a mere week after the momentous announcement of a holographic wormhole on a microchip (!!)—a feat that experts were calling the first-ever laboratory investigation of quantum gravity, and a new frontier for experimental physics itself. Nirenberg asked whether, a century from now, people might look back on the wormhole achievement as today we look back on Eddington’s 1919 eclipse observations providing the evidence for general relativity.

I confess: this was the first time I felt visceral anger, rather than mere bemusement, over this wormhole affair. Before, I had implicitly assumed: no one was actually hoodwinked by this. No one really, literally believed that this little 9-qubit simulation opened up a wormhole, or helped prove the holographic nature of the real universe, or anything like that. I was wrong.

To be clear, I don’t blame Professor Nirenberg at all. If I were a medieval historian, everything he said about the experiment’s historic significance might strike me as perfectly valid inferences from what I’d read in the press. I don’t blame the It from Qubit community—most of which, I can report, was grinding its teeth and turning red in the face right alongside me. I don’t even blame most of the authors of the wormhole paper, such as Daniel Jafferis, who gave a perfectly sober, reasonable, technical talk at the workshop about how he and others managed to compress a simulation of a variant of the SYK model into a mere 9 qubits—a talk that eschewed all claims of historic significance and of literal wormhole creation.

But it’s now clear to me that, between

(1) the It from Qubit community that likes to explore speculative ideas like holographic wormholes, and

(2) the lay news readers who are now under the impression that Google just did one of the greatest physics experiments of all time,

something went terribly wrong—something that risks damaging trust in the scientific process itself. And I think it’s worth reflecting on what we can do to prevent it from happening again.

This is going to be one of the many Shtetl-Optimized posts that I didn’t feel like writing, but was given no choice but to write.

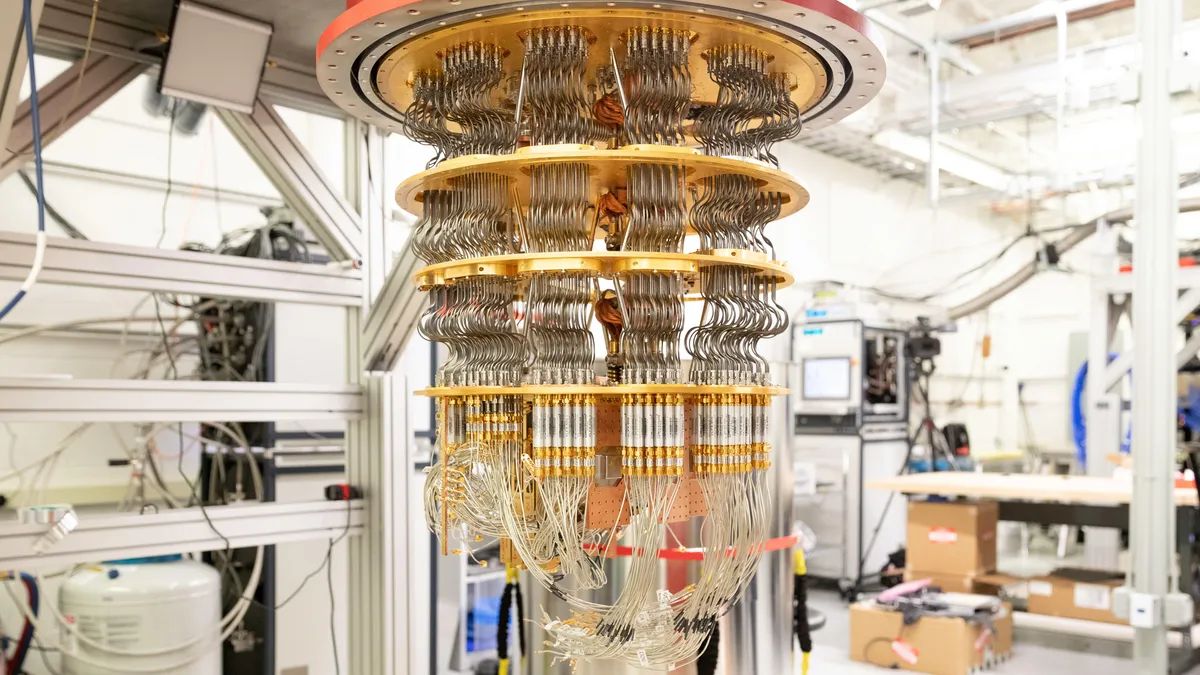

News, social media, and my inbox have been abuzz with two claims about Google’s Sycamore quantum processor, the one that now has 72 superconducting qubits.

The first claim is that Sycamore created a wormhole (!)—a historic feat possible only with a quantum computer. See for example the New York Times and Quanta and Ars Technica and Nature (and of course, the actual paper), as well as Peter Woit’s blog and Chad Orzel’s blog.

The second claim is that Sycamore’s pretensions to quantum supremacy have been refuted. The latter claim is based on this recent preprint by Dorit Aharonov, Xun Gao, Zeph Landau, Yunchao Liu, and Umesh Vazirani. No one—least of all me!—doubts that these authors have proved a strong new technical result, solving a significant open problem in the theory of noisy random circuit sampling. On the other hand, it might be less obvious how to interpret their result and put it in context. See also a YouTube video of Yunchao speaking about the new result at this week’s Simons Institute Quantum Colloquium, and of a panel discussion afterwards, where Yunchao, Umesh Vazirani, Adam Bouland, Sergio Boixo, and your humble blogger discuss what it means.

On their face, the two claims about Sycamore might seem to be in tension. After all, if Sycamore can’t do anything beyond what a classical computer can do, then how exactly did it bend the topology of spacetime?

I submit that neither claim is true. On the one hand, Sycamore did not “create a wormhole.” On the other hand, it remains pretty hard to simulate with a classical computer, as far as anyone knows. To summarize, then, our knowledge of what Sycamore can and can’t do remains much the same as last week or last month!

Let’s start with the wormhole thing. I can’t really improve over how I put it in Dennis Overbye’s NYT piece:

“The most important thing I’d want New York Times readers to understand is this,” Scott Aaronson, a quantum computing expert at the University of Texas in Austin, wrote in an email. “If this experiment has brought a wormhole into actual physical existence, then a strong case could be made that you, too, bring a wormhole into actual physical existence every time you sketch one with pen and paper.”

More broadly, Overbye’s NYT piece explains with admirable clarity what this experiment did and didn’t do—leaving only the question “wait … if that’s all that’s going on here, then why is it being written up in the NYT??” This is a rare case where, in my opinion, the NYT did a much better job than Quanta, which unequivocally accepted and amplified the “QC creates a wormhole” framing.

Alright, but what’s the actual basis for the “QC creates a wormhole” claim, for those who don’t want to leave this blog to read about it? Well, the authors used 9 of Sycamore’s 72 qubits to do a crude simulation of something called the SYK (Sachdev-Ye-Kitaev) model. SYK has become popular as a toy model for quantum gravity. In particular, it has a holographic dual description, which can indeed involve a spacetime with one or more wormholes. So, they ran a quantum circuit that crudely modelled the SYK dual of a scenario with information sent through a wormhole. They then confirmed that the circuit did what it was supposed to do—i.e., what they’d already classically calculated that it would do.

So, the objection is obvious: if someone simulates a black hole on their classical computer, they don’t say they thereby “created a black hole.” Or if they do, journalists don’t uncritically repeat the claim. Why should the standards be different just because we’re talking about a quantum computer rather than a classical one?

Did we at least learn anything new about SYK wormholes from the simulation? Alas, not really, because 9 qubits take a mere 2^9 = 512 complex numbers to specify their wavefunction, and are therefore trivial to simulate on a laptop. There’s some argument in the paper that, if the simulation were scaled up to (say) 100 qubits, then maybe we would learn something new about SYK. Even then, however, we’d mostly learn about certain corrections that arise because the simulation was being done with “only” n=100 qubits, rather than in the n→∞ limit where SYK is rigorously understood. But while those corrections, arising when n is “neither too large nor too small,” would surely be interesting to specialists, they’d have no obvious bearing on the prospects for creating real physical wormholes in our universe.
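To make the “512 complex numbers” point concrete, here is a minimal brute-force state-vector simulator in Python with NumPy. It is not the circuit from the wormhole paper: the random single-qubit gates, the alternating CZ layers, and the helper names `apply_1q`, `apply_cz`, and `simulate` are all illustrative assumptions. The point is only that the entire 9-qubit state fits in a few kilobytes of memory.

```python
import numpy as np

def apply_1q(state, gate, target, n):
    """Apply a 2x2 unitary `gate` to qubit `target` of an n-qubit state vector."""
    psi = np.moveaxis(state.reshape([2] * n), target, 0)
    psi = np.tensordot(gate, psi, axes=([1], [0]))  # contract gate with that qubit's axis
    return np.moveaxis(psi, 0, target).reshape(-1)

def apply_cz(state, a, b, n):
    """Controlled-Z on qubits a and b: flip the sign of amplitudes where both are 1."""
    psi = state.reshape([2] * n).copy()
    idx = [slice(None)] * n
    idx[a], idx[b] = 1, 1
    psi[tuple(idx)] *= -1
    return psi.reshape(-1)

def random_unitary_2x2(rng):
    """Random 2x2 unitary via QR decomposition of a complex Gaussian matrix."""
    z = rng.normal(size=(2, 2)) + 1j * rng.normal(size=(2, 2))
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))

def simulate(n=9, layers=10, seed=0):
    """Brute-force simulation of a hypothetical random n-qubit circuit."""
    rng = np.random.default_rng(seed)
    state = np.zeros(2**n, dtype=np.complex128)
    state[0] = 1.0  # start in |00...0>
    for layer in range(layers):
        for q in range(n):
            state = apply_1q(state, random_unitary_2x2(rng), q, n)
        for q in range(layer % 2, n - 1, 2):  # alternating nearest-neighbor CZs
            state = apply_cz(state, q, q + 1, n)
    return state

state = simulate()
print(state.size, state.nbytes)  # 512 amplitudes, 8192 bytes
```

Each extra qubit doubles the array, which is why this approach stops working somewhere around 50 qubits, not 9.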

And yet, this is not a sensationalistic misunderstanding invented by journalists. Some prominent quantum gravity theorists themselves—including some of my close friends and collaborators—persist in talking about the simulated SYK wormhole as “actually being” a wormhole. What are they thinking?

Daniel Harlow explained the thinking to me as follows (he stresses that he’s explaining it, not necessarily endorsing it). If you had two entangled quantum computers, one on Earth and the other in the Andromeda galaxy, and if they were both simulating SYK, and if Alice on Earth and Bob in Andromeda both uploaded their own brains into their respective quantum simulations, then it seems possible that the simulated Alice and Bob could have the experience of jumping into a wormhole and meeting each other in the middle. Granted, they couldn’t get a message back out from the wormhole, at least not without “going the long way,” which could happen only at the speed of light—so only simulated-Alice and simulated-Bob themselves could ever test this prediction. Nevertheless, if true, I suppose some would treat it as grounds for regarding a quantum simulation of SYK as “more real” or “more wormholey” than a classical simulation.

Of course, this scenario depends on strong assumptions not merely about quantum gravity, but also about the metaphysics of consciousness! And I’d still prefer to call it a simulated wormhole for simulated people.

For completeness, here’s Harlow’s passage from the NYT article:

Daniel Harlow, a physicist at M.I.T. who was not involved in the experiment, noted that the experiment was based on a model of quantum gravity that was so simple, and unrealistic, that it could just as well have been studied using a pencil and paper.

“So I’d say that this doesn’t teach us anything about quantum gravity that we didn’t already know,” Dr. Harlow wrote in an email. “On the other hand, I think it is exciting as a technical achievement, because if we can’t even do this (and until now we couldn’t), then simulating more interesting quantum gravity theories would CERTAINLY be off the table.” Developing computers big enough to do so might take 10 or 15 years, he added.

Alright, let’s move on to the claim that quantum supremacy has been refuted. What Aharonov et al. actually show in their new work, building on earlier work by Gao and Duan, is that Random Circuit Sampling, with a constant rate of noise per gate and no error-correction, can’t provide a scalable approach to quantum supremacy. Or more precisely: as the number of qubits n goes to infinity, and assuming you’re in the “anti-concentration regime” (which in practice probably means: the depth of your quantum circuit is at least ~log(n)), there’s a classical algorithm to approximately sample the quantum circuit’s output distribution in poly(n) time (albeit, not yet a practical algorithm).

Here’s what’s crucial to understand: this is 100% consistent with what those of us working on quantum supremacy had assumed since at least 2016! We knew that if you tried to scale Random Circuit Sampling to 200 or 500 or 1000 qubits, while you also increased the circuit depth proportionately, the signal-to-noise ratio would become exponentially small, meaning that your quantum speedup would disappear. That’s why, from the very beginning, we targeted the “practical” regime of 50-100 qubits: a regime where

- you can still see explicitly that you’re exploiting a 2^50- or 2^100-dimensional Hilbert space for computational advantage, thereby confirming one of the main predictions of quantum computing theory, but

- you also have a signal that (as it turned out) is large enough to see with heroic effort.
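The “exponentially small signal” argument can be sketched with back-of-the-envelope arithmetic. The sketch below assumes a simple digital error model (each gate fails independently, so circuit fidelity is the gate fidelity raised to the number of gates), an illustrative per-gate fidelity of 0.995, roughly one gate per qubit per layer, and depth growing as n/5. All of these numbers are assumptions for illustration, not figures from Google or USTC. The “~1/F² samples” line is a rough heuristic for resolving a signal of size F against O(1) per-sample noise.

```python
import math

def log10_circuit_fidelity(n_qubits, depth, gate_fidelity):
    """Digital error model: each gate succeeds independently, so the circuit
    fidelity is gate_fidelity ** n_gates. Returned as log10 to avoid underflow.
    Simplification: assume about one gate per qubit per layer."""
    n_gates = n_qubits * depth
    return n_gates * math.log10(gate_fidelity)

F_GATE = 0.995  # assumed per-gate fidelity, for illustration only

for n in (50, 100, 200, 500, 1000):
    depth = n // 5  # assumed: depth grows in proportion to n
    log_f = log10_circuit_fidelity(n, depth, F_GATE)
    # Rough heuristic: resolving a signal of size F takes ~1/F**2 samples.
    print(f"n={n:4d}  depth={depth:3d}  F ~ 10^{log_f:.1f}  samples ~ 10^{-2 * log_f:.1f}")
```

Under these assumptions the required sample count is modest near 50 qubits and astronomically large well before 1000, which is the qualitative picture described above.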

To their credit, Aharonov et al. explain all this perfectly clearly in their abstract and introduction. I’m just worried that others aren’t reading their paper as carefully as they should be!

So then, what’s the new advance in the Aharonov et al. paper? Well, there had been some hope that circuit depth ~log(n) might be a sweet spot, where an exponential quantum speedup might both exist and survive constant noise, even in the asymptotic limit of n→∞ qubits. Nothing in Google’s or USTC’s actual Random Circuit Sampling experiments depended on that hope, but it would’ve been nice if it were true. What Aharonov et al. have now done is to kill that hope, using powerful techniques involving summing over Feynman paths in the Pauli basis.

Stepping back, what is the current status of quantum supremacy based on Random Circuit Sampling? I would say it’s still standing, but more precariously than I’d like—underscoring the need for new and better quantum supremacy experiments. In more detail, Pan, Chen, and Zhang have shown how to simulate Google’s 53-qubit Sycamore chip classically, using what I estimated to be 100-1000X the electricity cost of running the quantum computer itself (including the dilution refrigerator!). Approaching the problem from a different angle, Gao et al. have given a polynomial-time classical algorithm for spoofing Google’s Linear Cross-Entropy Benchmark (LXEB)—but their algorithm can currently achieve only about 10% of the excess in LXEB that Google’s experiment found.
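For readers who haven’t met the Linear Cross-Entropy Benchmark, here is a minimal sketch of the quantity itself: LXEB = 2^n · (mean ideal probability of the observed samples) − 1. As an assumption, the ideal output distribution is modeled by normalized complex-Gaussian amplitudes (a Porter-Thomas stand-in for a deep random circuit), not real circuit data; the “noisy device” is a hypothetical mixture that outputs an ideal sample with probability F and a uniformly random string otherwise.

```python
import numpy as np

def linear_xeb(ideal_probs, samples, n_qubits):
    """Linear cross-entropy benchmark: 2**n * mean_i p_ideal(x_i) - 1."""
    return (2**n_qubits) * np.mean(ideal_probs[samples]) - 1.0

def porter_thomas_probs(n_qubits, rng):
    """Stand-in for a deep random circuit's ideal output distribution:
    normalized complex-Gaussian amplitudes (an assumption, not real circuit data)."""
    dim = 2**n_qubits
    amps = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    probs = np.abs(amps) ** 2
    return probs / probs.sum()

n = 14
num_samples = 200_000
rng = np.random.default_rng(1)
p = porter_thomas_probs(n, rng)

ideal = rng.choice(2**n, size=num_samples, p=p)    # perfect, noiseless sampler
uniform = rng.integers(0, 2**n, size=num_samples)  # fully depolarized sampler
fidelity = 0.1                                     # hypothetical circuit fidelity
mixed = np.where(rng.random(num_samples) < fidelity, ideal, uniform)

xeb_ideal = linear_xeb(p, ideal, n)      # close to 1
xeb_uniform = linear_xeb(p, uniform, n)  # close to 0
xeb_mixed = linear_xeb(p, mixed, n)      # close to the fidelity
print(xeb_ideal, xeb_uniform, xeb_mixed)
```

In this toy model the “excess” LXEB above zero tracks the fraction of genuinely ideal samples, which is why a spoofing algorithm is judged by how much of that excess it can reproduce.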

So, though it’s been under sustained attack from multiple directions these past few years, I’d say that the flag of quantum supremacy yet waves. The Extended Church-Turing Thesis is still on thin ice. The wormhole is still open. Wait … no … that’s not what I meant to write…

Note: With this post, as with future science posts, all off-topic comments will be ruthlessly left in moderation. Yes, even if the comments “create their own reality” full of anger and disappointment that I talked about what I talked about, instead of what the commenter wanted me to talk about. Even if merely refuting the comments would require me to give in and talk about their preferred topics after all. Please stop. This is a wormholes-‘n-supremacy post.

Comment #1 December 2nd, 2022 at 5:27 pm

> Daniel Harlow’s argument to me was that, if you had two entangled quantum computers, one on Earth and the other in the Andromeda galaxy, and if they were both simulating SYK, and if Alice on Earth and Bob in Andromeda both uploaded their own brains into their respective quantum simulations, then he believes they could have the subjective experience of jumping into a wormhole and meeting each other in the middle—something that wouldn’t have been possible with a merely classical simulation.

Hey wait stop! Alice and Bob’s subjective experience would reflect one another’s? It sounds like we’re breaking no-signaling here – though perhaps we can’t get the information out of the computer? Still, that would be pretty exciting if true.

(I still have to read the paper, Quanta article, and Natalie Wolchover’s tweet thread defending it.)

Comment #2 December 2nd, 2022 at 5:39 pm

I’m confused as to how Harlow’s argument would work. Quantum simulations must run on real-world physics, so in particular cannot entail faster-than-light information transfer. Simulated wormholes would however allow effective FTL communication by shortening geodesic distances between far-away points.

Unless I’m missing something, it seems like the only resolutions are (1) that you cannot simulate practically useful wormholes using QC or (2) that building a QC out of ordinary qubits lets you rewire the very geometry of space. It seems like (1) would be more plausible.

Sorry if I’m missing something…I don’t know anything about quantum gravity so would appreciate corrections from anyone who knows more.

Comment #3 December 2nd, 2022 at 5:55 pm

Rand #1 (and SR #2): Yeah, that’s exactly the point. You can’t get the information out of the wormhole, or not without “going around the long way,” which can only happen at the speed of light. Thus, the assertion that Alice and Bob would meet in the middle has a “metaphysical” character: it’s suggested by GR, but it could only ever be experimentally confirmed by Alice and Bob themselves, not by those of us on the outside.

Comment #4 December 2nd, 2022 at 6:28 pm

Scott #3: Thanks for the explanation!

Comment #5 December 2nd, 2022 at 6:52 pm

Scott,

you write

“And yet, this is not a sensationalistic misunderstanding invented by journalists. Some prominent quantum gravity theorists themselves—including some of my close friends and collaborators—persist in talking about the simulated SYK wormhole as “actually being” a wormhole. What are they thinking?”

It doesn’t give me any pleasure to be the “I told you so” guy here, but… I told you so, Scott. More specifically:

https://scottaaronson.blog/?p=6457#comment-1939489

https://scottaaronson.blog/?p=6599#comment-1942272

Now, you have the entire field of QC being entangled in the worldwide press with actual creation of wormholes and other nonsense. Good luck in trying to clear up that mess in the lay public’s mind. Even more avoidable damage to the reputation and credibility of QC due to hype.

My question is, what were *you* thinking, Scott? You always seemed like the sensible guy to me. Evidently, the “QC adults” you mentioned in your first response to my concerns were not in the room when all of this was being cooked up… or maybe nobody really understands “the idea that you can construct spacetime out of entanglement in a holographic dual description” and that’s why all the confusion, unlike what you suggested to me in your other snarky response (“Once you understand it, you can’t un-understand it!”).

Anyway, I know no fundamental idea about your friends is going to change by whatever I could say. But I can tell you they will keep doing it, over and over again.

Comment #6 December 2nd, 2022 at 6:54 pm

Just wanted to say thank you for the last three postings. All have been extremely interesting.

Comment #7 December 2nd, 2022 at 7:05 pm

I think that, metaphysically, we have a Wigner’s-friend situation. After all, how do we ask Alice and Bob about their experience? We have to somehow measure their reported status from the quantum computers to which they were uploaded. Such measurements entangle Alice/Bob with the rest of the laboratory.

Comment #8 December 2nd, 2022 at 7:10 pm

Alex #5: This sort of thing—

(1) eliding the distinction between simulations and reality,

(2) making a huge deal over a small quantum computer calculating something even though we all knew perfectly well what the answer would be, and

(3) it all getting blown up even further by the press

—has been happening over and over for at least 15 years, so I didn’t exactly need you to warn me that it would keep happening! 🙂 Yes, I expect such things to continue, unless and until the incentives change. And as it does, I’ll continue to tell the truth as best I can!

Equally obviously, though, none of this constitutes an argument against the scientific merit of AdS/CFT itself, just like the tsunami of QC hype isn’t an argument against Shor’s algorithm. Munging everything together, and failing to separate out claims, would just be perpetuating the very practices one objects to when hypemeisters do it.

Comment #9 December 2nd, 2022 at 7:26 pm

Hi Scott,

I think I must be missing something in your argument.

If “A foofs B” has a dual description “C blebs D”, and we establish that A does indeed foof B, would you agree that it is equally true to say that C blebs D?

If so, wouldn’t it be correct to say that this experiment has created a wormhole? It’s not a wormhole in our regular universe’s spacetime, but perhaps it’s a wormhole in some… where (? not exactly clear on this).

And from this, perhaps it follows why an equally-precise simulation on the classical computer wouldn’t create a wormhole in the same way? (This part seems dubious to me–I want to say that A foofing B is different from a simulation of A foofing B–after all, no matter how well you simulate a hurricane, nobody gets wet. But I’m wondering if this instinct is in conflict with my early claim that “A foofs B” is equally true as “C blebs D”. Hmm.. now that I think about it, maybe this is actually what you meant by “bring a wormhole into actual physical existence every time you sketch one with pen and paper.”)

Comment #10 December 2nd, 2022 at 8:15 pm

Scott #8,

Well, you are the one that sounded surprised about your friends in the first place, that’s why I quoted your paragraph. Otherwise, I wasn’t going to make any comment.

As for AdS/CFT, I wasn’t even commenting on its scientific merits, but on the cloud of noise that surrounds it and ends up, due to the very nature of that noise, producing these confusions. And that noise is deliberately perpetuated by the practitioners themselves, as noted, again, in what you wrote. I saw that in QG before and, more recently, from these very same people, in QC. That was all of my “warning”. Of course, in light of that, these recent hype developments in QC are hardly a surprise. I was just hoping that, knowing the tactics, maybe there was a chance to stop it.

Anyway, whatever.

Comment #11 December 3rd, 2022 at 1:57 am

I won’t comment on the wormhole BS. As a physicist I find the whole story just hilarious and a bad signal for how research is conducted today (with the aid of “improper” strategies...).

I have colleagues who simulated other physics systems (even nuclei) on the IBM QC using 5-7 qubits: all of these calculations could have been done by inverting a small matrix on a piece of paper.

On the QC they spent days and days getting something usable. We are still so far away from easily using these systems effectively, but it is exciting and promising. I’d like to think that for now it is like playing with ENIAC or something like that.

As for quantum advantage: my English is probably not good enough to get the message so I ask: Were you saying that:

1) quantum supremacy with nisq hardware was ALREADY expected to break down after a certain N?

2) How do you overcome the limit? Error correction or higher-fidelity? Or both !? Or what 😉 ?

3) Have the Kalai’s arguments any relevance in this limit?

Thank you very much for the whole great post!

Comment #12 December 3rd, 2022 at 2:51 am

I call bullshit on Harlow’s assertion that “it seems possible that the simulated Alice and Bob could have the experience of jumping into a wormhole and meeting each other in the middle”.

The dual description doesn’t matter, because it still boils down to an experiment with two distant quantum computers sharing an entangled state. Alice’s actions change precisely nothing at Bob’s side, and vice-versa. It doesn’t matter if these actions are uploading oneself into the quantum computer, you still can’t get any information faster than light. It doesn’t matter if this information is somehow accessible only to the uploaded self, there can’t be any information there to start with.
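The no-signaling point this comment relies on is a short linear-algebra fact: no local operation Alice performs on her half of an entangled state changes the reduced state, and hence the measurement statistics, on Bob’s side. A minimal sketch, assuming a single two-qubit Bell pair as a (drastically simplified) stand-in for two entangled quantum computers, and showing only local unitaries:

```python
import numpy as np

def bob_reduced_state(psi):
    """Partial trace over Alice (first qubit) of a two-qubit pure state."""
    m = psi.reshape(2, 2)   # m[a, b]: amplitude of |a>_Alice |b>_Bob
    return m.T @ m.conj()   # rho_B[b, b'] = sum_a m[a, b] * conj(m[a, b'])

def random_unitary_2x2(rng):
    """Random 2x2 unitary via QR decomposition of a complex Gaussian matrix."""
    z = rng.normal(size=(2, 2)) + 1j * rng.normal(size=(2, 2))
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))

bell = np.array([1, 0, 0, 1], dtype=np.complex128) / np.sqrt(2)  # (|00> + |11>)/sqrt(2)
rho_before = bob_reduced_state(bell)

rng = np.random.default_rng(7)
u_alice = random_unitary_2x2(rng)            # an arbitrary local unitary on Alice's side
after = np.kron(u_alice, np.eye(2)) @ bell   # acts on Alice's qubit only
rho_after = bob_reduced_state(after)

print(np.allclose(rho_before, np.eye(2) / 2), np.allclose(rho_before, rho_after))  # True True
```

Algebraically, Bob’s state goes from mᵀm* to mᵀ(UᵀU*)m*, and UᵀU* is the identity for any unitary U, so Bob sees exactly the same maximally mixed state either way.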

Comment #13 December 3rd, 2022 at 3:19 am

https://pubs.acs.org/doi/pdf/10.1021/acsenergylett.2c01969

This is being hyped as a great QC advance. The paper looks careful and thorough. Is it?

Comment #14 December 3rd, 2022 at 6:02 am

Hi, long time reader, first time commenter.

Thanks for your post! (And your blog in general! Despite my being a physics student, your blog piqued my interest in computational complexity.)

I still don’t get how Harlow’s argument is supposed to work. Even granting the consciousness uploading (which I guess is a discussion for another time), Alice and Bob would “experience” the simulation of SYK in (simulated) “real” spacetime, while if I understood correctly the wormhole is in the emergent holographic spacetime. All their experiences should be perfectly describable just in terms of QM.

Suppose that (rather than a simulation) we have a genuine physical system in the real world which follows the SYK Hamiltonian, and Alice and Bob have two pieces of this system. There still wouldn’t be an “actual” wormhole between them; it would just be an equivalent mathematical description.

Maybe I am missing something!

Comment #15 December 3rd, 2022 at 6:24 am

If this experiment would have consisted of a quantum simulation on “two entangled quantum computers,” then the hype would have been justified, even if only 9 qubits had been used from each quantum computer (i.e. 18 qubits in total).

Of course, I am not interested in an overhyped “entangled tardigrade”-like entanglement between quantum computers here, but in honest quantum entanglement, i.e. one which could be used for quantum teleportation. What I have in mind is an experiment with a source of entangled particles (probably spin-entangled photons), two quantum computers which are able to perform quantum computations using such “quantum inputs”, and some mechanism to “post-select” those computations on the two quantum computers which were actually entangled (by some sort of coincidence measurements).

Comment #16 December 3rd, 2022 at 7:12 am

Alex #5, it seems a bit much to me to excoriate Scott on his own blog for overhyped QC/QG results when he is debunking the hype in the self-same post. Moreover, Scott was *the* *first* scientist quoted in the mainstream press doing so. Sure, you can get after him for being friends with Lenny Susskind, but we don’t have to answer for our friends, especially when he is explicitly calling them out on his own blog.

From my recollection, Scott has always had an “I don’t know how I can help, but I’d be happy to try…” attitude when it comes to the whole It from Qubit business. And I haven’t seen him even once hyping the business, or even so much as getting over-excited by it.

Alex, in short, maybe you should direct your (perhaps well-motivated) anger at the people actually making the hyped-up, beyond-the-pale claims, rather than at those who are debunking them, just because the latter happen to be nice and friendly to them.

Comment #17 December 3rd, 2022 at 7:28 am

Scott,

“Nevertheless, if true, I suppose some would treat it as grounds for regarding a quantum simulation of SYK as “more real” than a classical simulation.”

Even granting the outlandish assumptions, I would still regard those grounds as entirely specious. The whole point of the wormhole hype is to plant the insipid claim in the public’s mind of wormholes in our *actual* 3+1 physical world. You don’t push your work in the NYT and Quanta Magazine if you’re trying to sell it to your quantum gravity peers. You push it to conjure up, in the general public’s mind, ideas from sci-fi movies like Interstellar, etc. What’s missing in this work, even when granting outrageous assumptions, is any connection whatsoever to that 3+1 physical world. There is *nothing* about this work that suggests the so-called SYK “wormholes” have anything to do with the wormholes from general relativity in our 3+1 universe. Re-enacting the math of the SYK “wormholes” on a QC does nothing to change that.

John Baez said on Woit’s blog that this is like if:

“a kid scrawls a picture of an inside-out building and the headline blares

BREAKING NEWS: CHILD BUILDS TAJ MAHAL!”

But I think the offense is considerably worse than that. To me it is as if a baby scrawls a crayon picture of random lines and dots and the parent raves to the media – “my kid built the Taj Mahal!” – all the while neglecting to mention:

* it is a drawing

* it is inside out

* it is in a universe where the laws of perspective/drawing may be entirely unrelated to our own

Comment #18 December 3rd, 2022 at 7:43 am

How embarrassing for the authors when future research on actual gravity in our universe discovers that it does not and cannot contain wormholes of the ER variety. Or maybe they forgot that wormholes have never been observed and are still just a conjectured (highly contested?) solution of GR?

Comment #19 December 3rd, 2022 at 8:02 am

I guess what confuses my very naive self about situations like this is that everyone I meet in academia (though not QC companies) working on QC agrees that hype like this wormhole business is bad for the field and swears they themselves would never do it. Yet somehow we get one of these papers every so often from very well established academic groups. Either I’m very bad at reading people, or I’m living under a rock and don’t interact with people who want hype – which do you think it is Scott?

Comment #20 December 3rd, 2022 at 9:10 am

Regarding the statement that there is nothing the experiment reveals that you couldn’t learn from a classical simulation, what about this section in the Quanta article:

“Surprisingly, despite the skeletal simplicity of their wormhole, the researchers detected a second signature of wormhole dynamics, a delicate pattern in the way information spread and un-spread among the qubits known as “size-winding.” They hadn’t trained their neural network to preserve this signal as it sparsified the SYK model, so the fact that size-winding shows up anyway is an experimental discovery about holography.

“We didn’t demand anything about this size-winding property, but we found that it just popped out,” Jafferis said. This “confirmed the robustness” of the holographic duality, he said. “Make one [property] appear, then you get all the rest, which is a kind of evidence that this gravitational picture is the correct one.””

This isn’t saying that there is a property which *could not* be detected in the classical simulation, but it at least seems to be a property that *was not* detected in the classical simulation, right? (Or did they see it first there? I haven’t read the actual scientific paper yet.)

And this is kind of nontrivial as a “thing learned about quantum gravity”, and not just for technical reasons. It’s nontrivial because AdS/CFT is already a conjecture. It’s a conjecture in the well-tested versions, but an even bigger conjecture is that it is in some sense “generic”: that there are lots of “CFT-ish” systems with “AdS-ish” duals. In this case, the system they were testing wasn’t N=4 SYM, or even SYK, but a weird truncation of SYK. The idea that “weird truncation of SYK” has a gravity dual is something the “maximal AdS/CFT” folks would expect, but that could not have been reliably predicted. And it sounds like, from those paragraphs, that the people who constructed this system were trying to get one particular gravity-ish trait out of it, but didn’t expect this other one. That very much sounds like evidence that “maximal AdS/CFT” covers this case, in a way that people didn’t expect it to, and thus like a nontrivial thing learned about quantum gravity.

Comment #21 December 3rd, 2022 at 9:12 am

Will #9:

Hmm.. now that I think about it, maybe this is actually what you meant by “bring a wormhole into actual physical existence every time you sketch one with pen and paper.”

Yup! 🙂

Comment #22 December 3rd, 2022 at 10:07 am

LK2 #11:

As for quantum advantage: my English is probably not good enough to get the message so I ask: Were you saying that:

1) quantum supremacy with nisq hardware was ALREADY expected to break down after a certain N?

Yes.

2) How do you overcome the limit? Error correction or higher-fidelity? Or both !? Or what 😉 ?

Yes. Higher fidelity, which in turn lets you do error-correction, which in turn lets you simulate arbitrarily high fidelities. We’ve understood this since 1996.
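The “higher fidelity lets you do error-correction, which lets you reach arbitrarily high fidelities” chain is the threshold theorem. A common textbook heuristic for concatenated codes is p_L = p_th · (p/p_th)^(2^k) after k levels of concatenation. The sketch below uses that heuristic with an assumed threshold of 1e-2 purely for illustration; real codes (e.g. surface codes) have different constants and scaling, and the cap at 1 is just to keep the output a probability.

```python
def logical_error_rate(p, p_th, levels):
    """Textbook heuristic for concatenated codes: each extra level of
    concatenation squares the ratio p / p_th, so
    p_L = p_th * (p / p_th) ** (2 ** levels), capped at 1."""
    return min(1.0, p_th * (p / p_th) ** (2 ** levels))

P_TH = 1e-2  # assumed threshold value, for illustration only

for p in (5e-3, 2e-2):  # one physical error rate below threshold, one above
    rates = [logical_error_rate(p, P_TH, k) for k in range(5)]
    print(f"p={p:.0e}:", " ".join(f"{r:.1e}" for r in rates))
```

Below threshold, each level of encoding drives the logical error rate down doubly exponentially; above threshold, encoding only makes things worse. That asymmetry is why improving raw gate fidelity comes first.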

3) Have the Kalai’s arguments any relevance in this limit?

Gil Kalai believes that (what I would call) conspiratorially-correlated noise will come in and violate the assumptions of the fault-tolerance theorem, and thereby prevent quantum error-correction from working even in principle. So far, I see zero evidence that he’s right. Certainly, Google’s and USTC’s Random Circuit Sampling experiments saw no sign at all of the sort of correlated noise that Gil predicts: in those experiments, the total circuit fidelity simply decayed like the gate fidelity, raised to the power of the number of gates. If that continues to hold, then quantum error-correction will “””merely””” be a matter of more and better engineering.

Comment #23 December 3rd, 2022 at 10:23 am

Joseph Shipman #13: I just looked at that paper. On its face, it seems to commit the Original Sin of Sloppy QC Research: namely, comparing only to brute-force classical enumeration (!), and never once even asking the question, let alone answering it, of whether the QC is giving them any speedup compared to a good classical algorithm. (Unless I missed it!)

There’s a tremendous amount of technical detail about the new material they discovered, and that part might indeed be interesting. But none of it bears even slightly on the question you and I care about: namely, did quantum annealing provide any advantage whatsoever in discovering this material, compared to what they could’ve done with (e.g.) classical simulated annealing alone?
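To make “a good classical baseline” concrete, here is a minimal sketch of plain simulated annealing (single-spin-flip Metropolis with geometric cooling) on a small random Ising instance, checked against brute-force enumeration. The instance, the cooling schedule, and all function names are hypothetical choices for illustration; nothing here is taken from the paper under discussion.

```python
import itertools
import math
import random

def random_instance(n, seed=0):
    """Hypothetical toy problem: an n-spin Ising model with Gaussian couplings and fields."""
    rng = random.Random(seed)
    J = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            J[i][j] = J[j][i] = rng.gauss(0.0, 1.0)
    h = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return J, h

def energy(J, h, s):
    """E(s) = sum_i h_i s_i + sum_{i<j} J_ij s_i s_j for spins s_i in {-1, +1}."""
    n = len(s)
    e = sum(h[i] * s[i] for i in range(n))
    e += sum(J[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return e

def simulated_annealing(J, h, sweeps=2000, t_start=3.0, t_end=0.05, seed=0):
    """Single-spin-flip Metropolis dynamics with a geometric cooling schedule."""
    rng = random.Random(seed)
    n = len(h)
    s = [rng.choice((-1, 1)) for _ in range(n)]
    e = energy(J, h, s)
    best_s, best_e = s[:], e
    for k in range(sweeps):
        t = t_start * (t_end / t_start) ** (k / (sweeps - 1))
        for i in range(n):
            field = h[i] + sum(J[i][j] * s[j] for j in range(n))  # J[i][i] == 0
            delta = -2 * s[i] * field  # energy change from flipping spin i
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                s[i] = -s[i]
                e += delta
                if e < best_e:
                    best_s, best_e = s[:], e
    return best_s, best_e

def brute_force(J, h):
    """Exhaustive 2**n enumeration: fine at n = 12, hopeless at realistic sizes."""
    return min(energy(J, h, s) for s in itertools.product((-1, 1), repeat=len(h)))

J, h = random_instance(12)
sa_s, sa_e = simulated_annealing(J, h)
exact = brute_force(J, h)
print(f"simulated annealing: {sa_e:.4f}   exact minimum: {exact:.4f}")
```

Brute force is only feasible here because n is tiny; at any interesting size, the comparison that matters for a claimed quantum advantage is against heuristics like this one, not against exhaustive enumeration.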

Comment #24 December 3rd, 2022 at 10:29 am

arbitrario #14: I should really let Harlow, or better yet one of the “true believers” in this, answer your question!

To me, though, it’s really a question of how seriously you take the ER=EPR conjecture. Do you accept that two entangled black holes will be connected by a wormhole, and that Alice and Bob could physically “meet in the middle” of that wormhole (even though they couldn’t tell anyone else)? If so, then I suppose I see how you get from there to the conjecture that two entangled simulated black holes should be connected by a simulated wormhole, and that a simulated Alice and Bob could meet in the middle of it, again without being able to tell anyone (even though now it’s metaphysically harder to pin down what’s even being claimed!).

Comment #25 December 3rd, 2022 at 10:55 am

Please correct me if I got anything wrong, but it sounds like we could do the Alice and Bob wormhole experiment in the near future. We just need to replace uploaded-brain Alice and Bob with much simpler probes (perhaps short text strings or simple computer programs?), and then extract their signal from the wormhole by “going the long way” with the speed of light, which shouldn’t take that long if both quantum computers are here on Earth.

Then again, entangling the quantum computers seems like a large technical bottleneck, and once we solve that, how is this experiment any different (apart from scale) from entangled photon experiments in quantum teleportation?
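For comparison, here is the textbook teleportation protocol as a tiny state-vector simulation. The conventions are my own choice: qubit 0 holds the input, qubits 1 and 2 hold the shared Bell pair, and qubit 0 is the most significant bit of the amplitude index. It illustrates only the standard protocol, not the wormhole circuit. Bob recovers the input only after corrections that depend on Alice's two classical measurement bits, which is why nothing travels faster than light.

```python
import math


def apply_1q(state, gate, target, n=3):
    """Apply a 2x2 gate to qubit `target` (qubit 0 = most significant bit)."""
    new = [0j] * len(state)
    shift = n - 1 - target
    for i, amp in enumerate(state):
        if amp == 0:
            continue
        bit = (i >> shift) & 1
        for out_bit in (0, 1):
            j = (i & ~(1 << shift)) | (out_bit << shift)
            new[j] += gate[out_bit][bit] * amp
    return new


def apply_cnot(state, control, target, n=3):
    """Flip `target` wherever `control` is 1."""
    new = [0j] * len(state)
    cs, ts = n - 1 - control, n - 1 - target
    for i, amp in enumerate(state):
        j = i ^ (1 << ts) if (i >> cs) & 1 else i
        new[j] += amp
    return new


S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]


def teleport(alpha, beta):
    """Return Bob's corrected qubit for each of Alice's 4 measurement outcomes."""
    state = [0j] * 8
    state[0b000], state[0b100] = alpha, beta   # |psi> on qubit 0, |00> on qubits 1, 2
    state = apply_1q(state, H, 1)              # make a Bell pair on qubits 1 and 2
    state = apply_cnot(state, 1, 2)
    state = apply_cnot(state, 0, 1)            # Bell-basis measurement = CNOT + H,
    state = apply_1q(state, H, 0)              # then read qubits 0 and 1
    results = {}
    for m0 in (0, 1):
        for m1 in (0, 1):
            base = (m0 << 2) | (m1 << 1)
            bob = [state[base], state[base | 1]]
            norm = math.sqrt(sum(abs(a) ** 2 for a in bob))
            bob = [a / norm for a in bob]
            if m1:                             # classical correction X^{m1}
                bob = [bob[1], bob[0]]
            if m0:                             # then Z^{m0}
                bob = [bob[0], -bob[1]]
            results[(m0, m1)] = bob
    return results


if __name__ == "__main__":
    for outcome, bob in teleport(0.6, 0.8j).items():
        print(outcome, bob)
```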

Comment #26 December 3rd, 2022 at 10:56 am

Anon #19: I think what’s going on is that there’s a continuum of QC-hype-friendliness, from people who dismiss even the most serious QC research there is, to people who literally expect Google’s lab to fall into a wormhole or something. 🙂

At each point on the continuum, people get annoyed by the atrocious hype on one side, and also annoyed by the narrow-minded sticklers on their other side. As for whether the point where I sit is a reasonable one … well, that’s for you to decide!

Comment #27 December 3rd, 2022 at 11:47 am

Scott – regarding the wormhole-in-a-lab, what would have been your sentiment about the old crummy BB84 device from the late-80’s developed by Bennett, Brassard, Smolin and friends at Yorktown Heights that could send “secret” messages a whopping distance of 32.5 cm? The turning of the Pockels cells famously made different sounds depending on the basis of the photons that Eve could have listened to instead of having to measure the qubits directly.

In 1989 Deutsch stated that the experimentalists “have created the first information processing device with capabilities that exceed those of the Universal Turing Machine.” We know so much more now and would definitely not describe the experiment as extending beyond Turing, but at least Deutsch posited that something *different* was happening in the lab at Yorktown Heights in the late 80’s than what happened at the beach in Puerto Rico where Bennett and Brassard first met to talk about what would become BB84 in the early 80’s.

Comment #28 December 3rd, 2022 at 12:11 pm

[…] There is a nice blog post by Scott Aaronson on several Sycamore matters. On quantum supremacy Scott expresses an optimistic […]

Comment #29 December 3rd, 2022 at 12:37 pm

Everything that is run on 9 or 25 qubits of Google’s QPU can be run on a classical computer. That doesn’t diminish the need to demonstrate it on the QPU. QC has only just begun to explore complex quantum states. It is awesome that Google verifies that algorithms that illuminate very ‘quantum’ behaviors work on real quantum systems. What we learned was that QM works for these types of complex states.

I watched the Quanta YouTube video. They described the algorithm as being that of a traversable wormhole. If I understand traversable wormholes, they have no horizons, but they are not a shortcut; they are the long way around. It is great that a team first used AI to construct a quantum circuit illustrating this effect in a minimal way, and that the circuit worked.

I am very excited for the day when Google will have hardware that meets the threshold theorem’s conditions, and we do learn awesome stuff about QM that we don’t know!!!

Comment #30 December 3rd, 2022 at 1:06 pm

“Yes, I expect such things to continue, unless and until the incentives change. ”

How would you change the incentives?

John Q Public has a great thirst for doom forecasts and the latest zany results from the crazy world of quantum mechanics and substituting simulation for the subject of simulation is a grand way to provide that.

There was reliance on the personal integrity of scientists even to the gallows, but as the volume of boiler housed data, irreproducible results, over-the-moon hype, etc. ever increases, it might be reasonable to re-examine that premise. As I have stated before, you display personal integrity in the best tradition of science, but many of your colleagues raise more than a reasonable doubt.

Comment #31 December 3rd, 2022 at 1:08 pm

“Gao et al. have given a polynomial-time classical algorithm for spoofing Google’s Linear Cross-Entropy Benchmark (LXEB)—but their algorithm can currently achieve only about 10% of the excess in LXEB that Google’s experiment found.”

Gao et al.’s main observation is that when you apply depolarization noise on several gates, there is still some correlation between the noisy samples and the ideal distribution (hence large LXEB), and they apply this observation to split the circuit into two distinct parts (which roughly allows the two parts to be computed separately). I would expect that you can get 100% (or more) of Google’s LXEB as follows: apply the noise only to some of the gates on the “boundary”, get substantially larger correlation, and make sure that the resulting noisy circuit still has a quick (perhaps somewhat slower) classical algorithm. (This is related to the “patch” and “elided” circuits in Google’s 2019 paper. In the patch circuits all boundary edges are deleted, and in the elided circuits only some of them are.)
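For readers who want the score itself: the linear cross-entropy benchmark on n qubits is 2^n times the average ideal probability of the observed samples, minus 1. The sketch below computes it, plus its exact expectation for any sampler distribution q. The `depolarized` helper is a simplified global-noise model (sample ideally with probability F, otherwise uniformly), assumed here for illustration. It is not Gao et al.'s algorithm, but it shows why a sampler only partly correlated with the ideal distribution still earns a positive score, proportional to F.

```python
def linear_xeb(samples, ideal_probs, n):
    """Linear cross-entropy benchmark: 2**n * mean(p_ideal(x_i)) - 1.

    samples     -- observed outcomes (any hashable labels)
    ideal_probs -- dict mapping each outcome to its ideal-circuit probability
    """
    mean_p = sum(ideal_probs.get(x, 0.0) for x in samples) / len(samples)
    return 2 ** n * mean_p - 1


def expected_linear_xeb(sampler_probs, ideal_probs, n):
    """Exact expected score of a sampler with distribution q: 2**n * sum_x q(x) p(x) - 1."""
    return 2 ** n * sum(q * ideal_probs.get(x, 0.0) for x, q in sampler_probs.items()) - 1


def depolarized(ideal_probs, n, fidelity):
    """Hypothetical global-noise sampler: ideal with prob `fidelity`, else uniform.
    Assumes `ideal_probs` lists every outcome."""
    uniform = 1 / 2 ** n
    return {x: fidelity * p + (1 - fidelity) * uniform for x, p in ideal_probs.items()}


if __name__ == "__main__":
    p = {0: 0.75, 1: 0.25}  # toy 1-qubit "ideal" distribution
    print(expected_linear_xeb(p, p, 1))                     # noiseless sampler
    print(expected_linear_xeb(depolarized(p, 1, 0.1), p, 1))  # 10% fidelity
```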

Comment #32 December 3rd, 2022 at 2:28 pm

Scott #22:

thank you very much!

It seems time for me to look seriously into the fault-tolerance theorem.

Comment #33 December 3rd, 2022 at 2:30 pm

In the original ER=EPR paper (arXiv:1306.0533), Maldacena and Susskind write:

“It is very tempting to think that *any* EPR correlated system is connected by some sort of ER bridge, although in general the bridge may be a highly quantum object that is yet to be independently defined. Indeed, we speculate that even the simple singlet state of two spins is connected by a (very quantum) bridge of this type.”

To me, this is saying there is a continuum between the concept of wormhole and the concept of entanglement. All wormholes, quantum mechanically, are built from entanglement, and all entanglement corresponds to a kind of wormhole. (And presumably, e.g. all quantum teleportation lies on a continuum with traversable wormholes, etc.)

I find this an attractive and plausible hypothesis, and well-worked out in the case of entangled black holes, but still extremely vague at the other end of the continuum. What is the geometric dual of the two-spin singlet state? Does it depend on microphysical details – i.e. the dual is different if a Bell state arises in standard model physics, versus if it arises in some other possible world of string theory? Or is there some geometric property that is universally present in duals of Bell states?

As far as I know, there are still no precise answers to questions like these.

Comment #34 December 3rd, 2022 at 5:21 pm

4gravitons #20: To the extent they learned something new, it seems clear from the description that they learned it from the process of designing the compressed 9-qubit circuit, and not at all from the actual running of the circuit. By the time they’d put the latter on the actual QC, they’d already learned whatever they could.

Comment #35 December 3rd, 2022 at 5:56 pm

@Scott #24

But if Alice and Bob are uploaded into physically distant parts of a quantum computer, if they are able to meet, even ‘encrypted’ inside the wormhole, even if they can only get out the long way, there must be some kind of long-range causal connection, since Alice and Bob started out far apart, which would necessitate there existing something like a wormhole in actual physical reality to enable that.

In fact, something like this (with simple particles playing the role of Alice and Bob, across more feasible distances), might actually be a good way to experimentally test ER=EPR.

Comment #36 December 3rd, 2022 at 6:44 pm

Kudos for calling the hype what it is. Regarding your friends that do the damage, recall the advice: “Lord, protect me from my friends; I can take care of my enemies.“

Comment #37 December 3rd, 2022 at 7:31 pm

Adam Treat #16

That’s all very fair. I don’t consider Scott responsible for this stunt by any means. And I salute his strong rebuttals.

What happened was that I just read that paragraph I quoted and that made me recall those previous brief conversations in this blog, and then I just felt a bit frustrated at his expressed frustration with his friends.

He can befriend whoever he wants, of course, and be involved in whatever research lines he likes.

Personally, my choices would obviously be very different, since I don’t respect those people. And, indeed, I can assure you that my anger goes to them, not to Scott.

But, going back to the problem of hype, I think more needs to be done. This type of hype is new: QG inside QC. Both fields already had a difficult time with hype of their own, but this new combination is particularly worrying and dangerous; the levels of surrealism are jaw-dropping even by the standards of hype in these fields.

Comment #38 December 3rd, 2022 at 8:38 pm

> This is going to be one of the many Shtetl-Optimized posts that I didn’t feel like writing, but was given no choice but to write.

Then why didn’t you just have ChatGPT write it? XD

Comment #39 December 3rd, 2022 at 8:56 pm

bystander #36, Alex #37, etc.: Like, imagine you had a box that regularly spit out solid gold bars and futuristic microprocessors, but also sometimes belched out dense smoke that you needed to spend time cleaning up. It would still be a pretty great box, right? You’d still be lucky to have it, no?

Lenny Susskind has contributed far more to how we think about quantum gravity — to how even his critics think about quantum gravity — than all of us in this comment thread combined. If his friends sometimes have to … tone things down slightly when he gets a little too carried away with an idea, that’s a price worth paying many times over.

Comment #40 December 3rd, 2022 at 9:01 pm

Shion Arita #35:

In fact, something like this (with simple particles playing the role of Alice and Bob, across more feasible distances), might actually be a good way to experimentally test ER=EPR.

But if (as we said) you can’t get a message out from the simulated wormhole, if all the experiments that an external observer can do just yield the standard result predicted by conventional QM, then what exactly would the experimental test be?

Comment #41 December 3rd, 2022 at 9:05 pm

Gil Kalai #31: Yeah, I also think it’s plausible (though not certain) that the LXEB score achieved by Gao et al.’s spoofing algorithm can be greatly improved. Keep in mind, though, that the experimental target that algorithm is trying to hit will hopefully also be moving!

Comment #42 December 3rd, 2022 at 9:35 pm

OhMyGoodness #30:

How would you change the incentives?

I’m not sure, but I’d guess the strong, nearly-unanimous pushback that the “literal wormhole” claim has gotten in the science blogosphere has already changed the incentives, at least marginally!

Comment #43 December 3rd, 2022 at 10:29 pm

Do you think that the noisy random circuit sampling result (or ideas from it) can be used to get a polynomial time algorithm for noisy BosonSampling? Based off my first reading, it seems not immediately applicable to BosonSampling, but it seems like the ideas could be transferred to that.

Comment #44 December 3rd, 2022 at 11:56 pm

Still Figuring It Out #25:

Please correct me if I got anything wrong, but it sounds like we could do the Alice and Bob wormhole experiment in the near future. We just need to replace uploaded-brain Alice and Bob with much simpler probes (perhaps short text strings or simple computer programs?), and then extract their signal from the wormhole by “going the long way” with the speed of light, which shouldn’t take that long if both quantum computers are here on Earth.

Then again, entangling the quantum computers seems like a large technical bottleneck, and once we solve that, how is this experiment any different (apart from scale) from entangled photon experiments in quantum teleportation?

Indeed, see my comment #40. Even after your proposed experiment was done, a skeptic could say that all we did was to confirm QM yet again, by running one more quantum circuit on some qubits and then measuring the output. Nothing would force the skeptic to adopt the wormhole language: that language exists, but the whole point of duality is that it doesn’t lead to any predictions different from the usual quantum-mechanical ones. See the difficulty? 🙂

Comment #45 December 4th, 2022 at 12:02 am

Alex Fischer #43: Excellent question! While the many differences between RCS and BosonSampling make it hard to do a direct comparison, some might argue that the “analogous” classical simulation results for noisy BosonSampling were already known. See for example this 2018 paper by Renema, Shchesnovich, and Garcia-Patron, which deals with a constant fraction of lost photons, with some scaling depending on the fraction.

In any case, whatever loopholes remain in the rigorous results, I’ve personally never held out much hope that noisy BosonSampling would lead to quantum supremacy in the asymptotic limit—just like I haven’t for RCS and for similar reasons. At least in the era before error-correction, the goal, with both BosonSampling and RCS, has always been what Aharonov et al. are now calling “practical quantum supremacy.”

Comment #46 December 4th, 2022 at 12:25 am

Mark S. #27:

Scott – regarding the wormhole-in-a-lab, what would have been your sentiment about the old crummy BB84 device from the late-80’s developed by Bennett, Brassard, Smolin and friends at Yorktown Heights that could send “secret” messages a whopping distance of 32.5 cm? … In 1989 Deutsch stated that the experimentalists “have created the first information processing device with capabilities that exceed those of the Universal Turing Machine.”

While I was only 8 years old in 1989, I believe I would’ve pushed back against Deutsch’s claim … the evidence being that I’d still do so today! 🙂

In particular, while you and I know perfectly well that this isn’t what Deutsch meant, his statement (which I confirmed here by googling) is practically begging for some journalist to misconstrue it as “IBM’s QKD device violates the Church-Turing Thesis; it does something noncomputable; it can’t even be simulated by a Turing machine.” And in this business, I believe we ought to hold ourselves to a higher standard than

(1) knowing the truth, and

(2) saying things that in our and other experts’ minds convey the truth.

We should also

(3) anticipate the exciting false things that non-experts will likely take us to mean, and rule them out!

Having said that, Deutsch’s statement was on much firmer ground than the wormhole claim in at least one way. Namely, the IBM device’s ability to perform QKD—an information-processing protocol impossible in a classical universe—was ultimately a matter of observation. There was no analogue there to the metaphysical question of whether a computational simulation of a wormhole “actually brings a wormhole into physical existence.”

Comment #47 December 4th, 2022 at 5:12 am

So the next step is E. Musk asking for public funding to build a network of teleportation stations, is that it?

Comment #48 December 4th, 2022 at 8:23 am

Scott,

Could you explain a little more about the hope for the sweet spot with “circuit depth ~log(n)” … Now that you know this hope is dashed what does it mean for future experiments? Does this alter the trajectory of what Google will attempt next or the ability to scale up to higher qubits? Basically, I’m wondering what effect this has on the ongoing fight to prove quantum supremacy beyond all doubters…

Comment #49 December 4th, 2022 at 8:26 am

“if someone simulates a black hole on their classical computer, they don’t say they thereby “created a black hole.” Or if they do, journalists don’t uncritically repeat the claim”.

“I will die on the following hill: that once you understand the universality of computation, and how a biological neural network is just one particular way to organize a computation, the obvious, default, conservative thesis is then that any physical system anywhere in the universe that did the same computations as the brain would share whatever interesting properties the brain has: intelligent behavior (almost by definition), but presumably also sentience”.

So the rules for sentience and black holes/wormholes are different?

Comment #50 December 4th, 2022 at 8:27 am

(LK2 #11, Scott #22) Hi everybody,

1) The crucial ingredient of my argument for why quantum fault-tolerance is impossible deals with the rate of noise. My argument asserts that it will not be possible to reduce the rate of noise to the level that allows the creation of good-quality quantum error-correcting codes. This is based on analyzing the (primitive; classical) computational complexity class that describes NISQ computations (with fixed error rate). The in-principle failure of quantum computation is also related to the strong noise-sensitivity of NISQ computations at subconstant error rates.

2) Error correlation is not part of my current argument and I don’t believe in “conspiratorially-correlated noise that will come in and violate the assumptions of the fault-tolerance theorem, and thereby prevent quantum error-correction from working even in principle.” (Again, my argument is for why efforts to reduce the rate of noise will hit a wall, even in principle.) I did study various aspects of correlated errors (mainly, before 2012) and error-correlation is still part of the picture: namely, without quantum fault-tolerance the type of errors you assume for gated entangled qubits will be manifested also for entangled qubits where the entanglement was obtained indirectly through the computation. (As far as I can see, this specific prediction is not directly related to any finding of the Google 2019 experiment.)

3) Actually, some of my earlier results showed that exotic forms of error correlation will allow quantum fault-tolerance (a 2012 paper with Kuperberg) and earlier, in 2006, I showed that if the error rate is small enough, no matter what diabolic correlations exist, log-depth quantum computation (and, in particular, Shor’s algorithm) still prevails.

4) Let me note that under the assumption of fixed-rate readout errors, my simple (Fourier-based) 2014 algorithm with Guy Kindler (for boson sampling) applies to random circuit sampling and shows that approximate sampling can be achieved by low-degree polynomials; hence random circuit sampling represents a very low-level computational subclass of P. I don’t know if this conclusion applies to the model from Aharonov et al.’s recent paper, and this is an interesting question. (Aharonov et al.’s noise model is based on an arbitrarily small constant amount of depolarizing noise applied to each qubit at each step.) Aharonov et al.’s algorithm also seems related to Fourier expansion.
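The Fourier point can be illustrated in a few lines. Assuming independent readout noise that flips each output bit with probability p, the Walsh-Fourier coefficient of a distribution on a subset S of bits gets multiplied by (1-2p)^|S|, so high-degree terms are damped and a low-degree truncation captures most of the noisy distribution. This is a generic sketch of that principle, not the Kalai and Kindler algorithm itself or the Aharonov et al. noise model; the example distribution is arbitrary.

```python
from itertools import product


def parity(S, x):
    """(-1)^(S . x) for bit tuples S and x."""
    return (-1) ** (sum(s & b for s, b in zip(S, x)) % 2)


def walsh_coefficients(dist, n):
    """hat_p(S) = sum_x p(x) * (-1)^(S . x) for every subset S, encoded as a bit tuple."""
    return {S: sum(px * parity(S, x) for x, px in dist.items())
            for S in product((0, 1), repeat=n)}


def readout_noise(dist, n, p):
    """Flip each output bit independently with probability p."""
    noisy = {}
    for x, px in dist.items():
        for e in product((0, 1), repeat=n):
            k = sum(e)
            y = tuple(b ^ f for b, f in zip(x, e))
            noisy[y] = noisy.get(y, 0.0) + px * p ** k * (1 - p) ** (n - k)
    return noisy


def low_degree_approx(coeffs, n, max_degree):
    """Rebuild p(x) = 2^-n * sum_S hat_p(S) (-1)^(S . x), keeping only |S| <= max_degree."""
    return {x: sum(c * parity(S, x) for S, c in coeffs.items() if sum(S) <= max_degree) / 2 ** n
            for x in product((0, 1), repeat=n)}


if __name__ == "__main__":
    dist = {(0, 0, 0): 0.5, (1, 1, 0): 0.3, (1, 0, 1): 0.2}  # arbitrary example
    clean = walsh_coefficients(dist, 3)
    noisy = walsh_coefficients(readout_noise(dist, 3, 0.1), 3)
    for S in clean:
        print(S, round(clean[S], 4), round(noisy[S], 4))
```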

5) Scott highlights the importance of the remarkable agreement in the Google 2019 experiment between the total circuit fidelity and the gate fidelity, raised to the power of the number of gates, and refers to it as “the single most important result we learned scientifically from these experiments”. This is related to another aspect of my own current interest, a careful examination of the Google 2019 experiment, and it goes back, for example, to an interesting videotaped discussion regarding the evaluation of the Google claims in which both Scott and I participated in Dec. 2019. As some of you may remember, I view the excessive agreement between the LXEB fidelity and the product of the individual fidelities as a reason for concern regarding the reliability of the 2019 experiment.

Comment #51 December 4th, 2022 at 8:35 am

@scott#40

Thought about it for a bit, and came up with this:

-Design a system that’s simple enough that it’s practically possible (though it probably will be difficult) to brute force ‘decrypt’ what happened inside the wormhole part of the simulation. This should work because it’s not actually ‘impossible’ to decrypt the wormhole–just exponentially difficult. So maybe there’s some kind of toy system that is complicated enough to do what we want but simple enough to be decryptable.

-Also have the computation generate some kind of hash that’s sensitive to everything that happens in it. The reason for this will become apparent later. Or rather, each side of the wormhole will create its own hash, but this is a bit of a non-central detail.

-Simulate this on a classical computer.

-Brute force decrypt the computation and verify that Alice and Bob had interaction inside the ‘wormhole’. Note that this does not necessitate a long-range causal connection in this case, since it’s just using large amounts of classical resources to locally simulate what the effect of a long-range causal connection would be. I guess you could also run it on a quantum computer without separated parts.

-Now run the computation on the entangled separated quantum computers, and see if each side’s hash matches the classical case. If it does, the same computation occurred. But since the parts were separated by a great distance, this means that there was some kind of long-range influence. This test should work because the hash allows us to see whether the encrypted data is the same or different, without decrypting it. You’d still have to bring both hashes together the long way to verify, but if they match it does in fact mean that the computation on the Alice side influenced the computation on Bob’s side.

Comment #52 December 4th, 2022 at 8:36 am

manorba#47

His boring machines will be upgraded to tunnel through space time.

Comment #53 December 4th, 2022 at 8:53 am

[…] The second claim is that Sycamore’s pretensions to quantum supremacy have been refuted. The latter claim is based on this recent preprint by Dorit Aharonov, Xun Gao, Zeph Landau, Yunchao Liu, and Umesh Vazirani. No one—least of all me!—doubts that these authors have proved a strong new technical result, solving a significant open problem in the theory of noisy random circuit sampling. On the other hand, it might be less obvious how to interpret their result and put it in context. See also a YouTube video of Yunchao speaking about the new result at this week’s Simons Institute Quantum Colloquium, and of a panel discussion afterwards, where Yunchao, Umesh Vazirani, Adam Bouland, Sergio Boixo, and your humble blogger discuss what it means. … (Shtetl-Optimized / Scott Aaronson) […]

Comment #54 December 4th, 2022 at 9:02 am

A giant fault-tolerant QC will give control of unitary development and collapse. In this setting we know QM. What will we learn about QM? Nothing; we know it already.

It may simulate molecules and condensed matter well enough to give advances in those fields.

It may factor large numbers or do other calculations better than classical computers.

It won’t answer QG questions, for all the same reasons humans can’t: no data!!! It will be able to simulate various holographic models, but it can never give evidence for the truth of holography.

Of course it is also possible that nature is such that fault tolerance cannot be achieved. Systems with many dof collapse (or if you prefer get stuck in a branch). Is there any known system of large dof that doesn’t collapse?

In QG, unitary evolution is often assumed for systems with many dof. I can never believe this. It leads to awesome math, but physical reality? I don’t think so. It may be important for QM to have consistent unitary evolution even if it cannot be achieved.

An observer collecting Hawking radiation would certainly collapse the wave function. I don’t believe that the other branches, which are unreachable to the observer, can affect the GR metric that the observer lives in. I think ER=EPR can be true only in an unrealistic situation where unitary evolution is preserved, i.e. no observers. A fault-tolerant QC could evolve a holographic version of this. That may be interpreted as an argument against believing in fault tolerance???

Comment #55 December 4th, 2022 at 9:21 am

Scott #46 – thanks! And thanks for considering my hypothetical. I can definitely get behind your third bullet. I think that’s a fair burden to place on the media and the scientists who communicate with them.

But, perhaps in relation to the first two, the author of the Quanta article has pointed to a talk led by Harlow on hardware vs. software at the 2017 IFQ Conference. Harlow’s contention, as I could interpret, was that it’s fair to be “hardware ambivalent” about certain topics in physics, and that it’s wrong to state that certain things having certain properties are only *real* if a lump of material realizes the properties, in contrast to software running on a (quantum) computer to characterize that material and those properties. At least immediately during the conversation at the conference, you and others appeared receptive to such a position (positions might have changed or been more refined afterwards, of course).

As an example I’ve heard that the toric code is essentially “the same” as a topological quantum computer. I think this question was initially posited by someone else, but – if a transmon or ion-trap quantum computer were to successfully implement a toric code, could you defend a NYT or Quanta headline such as “Physicists use quantum computers to create new phase of matter”?

Comment #56 December 4th, 2022 at 10:38 am

Adam Treat #48:

Could you explain a little more about the hope for the sweet spot with “circuit depth ~log(n)” … Now that you know this hope is dashed what does it mean for future experiments?

OK good question. What’s special about depth log(n) is that

(1) the quantum state is sufficiently “scrambled” to get “anti-concentration” (a statistical property used in the analysis of these experiments), but

(2) even if every gate is subject to constant noise, the output distribution still has Ω(1/exp(log(n))) = Ω(1/poly(n)) variation distance from the uniform distribution, raising the prospect that a signal could be detected with only polynomially many samples.

That’s why it briefly looked like a “sweet spot,” potentially attractive for future experiments — though as far as I know, no experimental groups had actually made any concrete plans based on this idea (though of course it’s hard to say, since once you’re doing an experiment your depth is some actual number, not “O(log(n))” or whatever). Anyway, the implication is that we can now tell the experimentalists: never mind, don’t waste your time on the large-n, “logarithmic”-depth RCS regime! 🙂
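The arithmetic behind point (2), as a hedged sketch: suppose, as a simplifying assumption, that the distinguishable signal decays like e^{-αd} with circuit depth d, for some constant α > 0 set by the per-gate noise rate, and that a signal of size δ needs on the order of 1/δ² samples to detect. Then:

```latex
\[
\delta(d) \approx e^{-\alpha d}, \quad d = c \ln n
\;\Longrightarrow\;
\delta \approx e^{-\alpha c \ln n} = n^{-\alpha c},
\qquad
N_{\text{samples}} \sim \frac{1}{\delta^{2}} = n^{2\alpha c}.
\]
\[
\text{By contrast, } d = c\,n \;\Longrightarrow\; \delta \approx e^{-\alpha c n},
\qquad N_{\text{samples}} \sim e^{2\alpha c n}.
\]
```

So logarithmic depth is exactly where the required number of samples stays polynomial, which is why it looked special.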

Comment #57 December 4th, 2022 at 10:52 am

Scott, yet again a careful, measured and factual clarification. This time much needed, and this time with a tinge of sadness that this could even be reported. I don’t know if the researchers or the people involved on the QC were given a chance to comment, but your point about how this further creates needless confusion is spot on. I won’t comment on the word hype (or, as Peter elegantly describes it, “publicity stunt”) since no comment is required. Thank you yet again.

Comment #58 December 4th, 2022 at 11:07 am

James Cross #49: What an amusing juxtaposition! Here’s what I’d say:

We agree, presumably, that a simulated hurricane doesn’t make anyone wet … at least, anyone in our world.

By contrast, simulated multiplication just is multiplication.

Is simulated consciousness consciousness? That’s one of the most profound questions ever asked. Like I said in the passage you quoted, it seems to me that the burden is firmly on anyone who says that it isn’t, to articulate the physical property of brains that makes them conscious and that isn’t shared by a mere computer simulation.

At the least, though, we’d like a simulated consciousness to be impressive: to pass the Turing Test and so on. No one is moved by claims that a 2-line program to reverse an input string manifests consciousness.

Now we’re faced by a new question of this kind: is a simulated wormhole a wormhole? Or, maybe it’s only a wormhole for simulated people in a simulated spacetime?

However you answer that imponderable, my point was that a crude 9-qubit simulation of an SYK wormhole isn’t obviously much more impressive, conceptually or technologically, than a “simulated wormhole” that consists of a wormhole that you’d sketch with pen and paper. Or not today, anyway, when running ~9-qubit, ~100-gate circuits and confirming that they behave as expected has become routine.
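To put "~9 qubits, ~100 gates" in perspective: a 9-qubit state vector has only 2^9 = 512 complex amplitudes, so even a naive pure-Python simulator handles it instantly. The random H/T/CZ circuit below is hypothetical and is not the compressed wormhole circuit; it only shows the scale involved.

```python
import cmath
import math
import random


def apply_single(state, gate, q):
    """Apply a 2x2 gate in place to qubit q (qubit 0 = least significant bit)."""
    step = 1 << q
    for i in range(len(state)):
        if i & step == 0:                      # visit each (bit=0, bit=1) pair once
            a, b = state[i], state[i | step]
            state[i] = gate[0][0] * a + gate[0][1] * b
            state[i | step] = gate[1][0] * a + gate[1][1] * b


def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign of amplitudes where both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    for i in range(len(state)):
        if i & mask == mask:
            state[i] = -state[i]


def random_circuit(n=9, num_gates=100, seed=1):
    """Run a hypothetical random circuit of H, T and CZ gates on |0...0>."""
    rng = random.Random(seed)
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    s = 1 / math.sqrt(2)
    H = [[s, s], [s, -s]]
    T = [[1, 0], [0, cmath.exp(1j * math.pi / 4)]]
    for _ in range(num_gates):
        if rng.random() < 0.5:
            q1, q2 = rng.sample(range(n), 2)
            apply_cz(state, q1, q2)
        else:
            apply_single(state, rng.choice((H, T)), rng.randrange(n))
    return state


if __name__ == "__main__":
    final = random_circuit()
    print(len(final), sum(abs(a) ** 2 for a in final))
```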

Hope that clarifies!

Comment #59 December 4th, 2022 at 11:18 am

Shion Arita #51: While I didn’t follow every detail of your proposal, the question, once again, is how you’d answer a skeptic who says that you’re just doing a bunch of computations that give an answer that you yourself already knew in advance, computations that couldn’t possibly have given a different answer, rather than doing an experiment that could tell you anything about the possibility or impossibility of wormholes in our “base-level” physical spacetime.

After all, if the claim just boils down to “dude … if we created an entire simulated universe with wormholes, then that universe would have wormholes in it!”, then you didn’t need PhD physicists to help establish the claim, did you? 🙂

Comment #60 December 4th, 2022 at 11:46 am

#56 Scott

Thanks for indulging me!

I think that sentience, like a black hole (and presumably a wormhole), is a natural phenomenon that can be described and simulated with computations, but in neither case is the abstract model simulation the same as the phenomenon.

However, there are simulations that are different from abstract models – concrete simulations that are realized in physical materials, for example wind tunnels and wave pools which are scale models which may have characteristics that make them nearly identical to the actual phenomenon they model.

So the question about a quantum computer wormhole would be whether it is merely an abstract model or is it a concrete model. From what I can gather, it is a purely abstract model in this research.

Comment #61 December 4th, 2022 at 12:02 pm

Scott #39

“Lenny Susskind has contributed far more to how we think about quantum gravity — to how even his critics think about quantum gravity — than all of us in this comment thread combined.”

It is interesting that you wrote “to how we think about quantum gravity” instead of “to quantum gravity”. Susskind’s contributions to really understanding quantum gravity have been null, and his contributions to how (some) people think about quantum gravity have been very negative to the field: he has made people lose a lot of time with each idea he has proposed, none of which ended up quite working (and some of which, particularly the latest ones like QM=GR, are sheer nonsense). His modus operandi of overhyping everything he does is not a bug but a feature: without it, his influence would have probably been much smaller.

So a better analogy would be with a box that produces shining plastic bars, but misleads some people into thinking that they’re solid golden bars, and also produces futuristic-looking microprocessors which however don’t work, and on top of that produces dense smoke as in your example. That’s Lenny Susskind as far as QG is concerned.

Comment #62 December 4th, 2022 at 12:39 pm

Mark S. #55: I confess I don’t remember what I said at Daniel’s talk in 2017—I haven’t watched the video, and my memory is blurred by the birth of my son literally 2 days later.

The way I’d put it now, though, is that given any claim to have created some new physical entity in the lab (a nonabelian anyon, a Bose-Einstein condensate, a wormhole, whatever), there are several crucial questions we should ask:

(1) What, if anything, did we learn from studying this entity that we weren’t already certain about, e.g. from theory or calculations on ordinary laptops?

(2) What, if anything, could we learn from studying it in the future?

(3) What, if anything, can the new entity be used for (including in future physics experiments)?

(4) How difficult a technological feat was it to produce the new entity? Where does it fall on the continuum between “PR stunt, anyone could’ve done it” and “genuine, huge advance in experimental technique”?

(5) Whatever the new entity is called (“wormhole,” “anyon,” etc.), to what extent is it the thing people originally meant by that term, and to what extent is it a mockup or simulation of the thing in a different physical substrate?

(6) In talking about their experiment, how clear and forthright have the researchers been in answering questions (1)-(5)—and in proactively warning the public away from exciting but false answers?

My personal feeling is that, judged by the above criteria in their entirety, not leaning exclusively on any one of them, the wormhole work gets maybe a D. Not an F, but a D. So, that’s why I’ve been negative about it, if not quite as negative as some others on the blogosphere!

Comment #63 December 4th, 2022 at 2:01 pm

Michael Janou Glaeser #61: Who if anyone, in your judgment, has made genuine contributions to understanding quantum gravity?

Comment #64 December 4th, 2022 at 3:41 pm

I also don’t see how this wormhole trick evades no-signaling. Alice writes down a message, encodes the paper into her simulation, and drops it into the wormhole. Bob encodes his brain into his own simulation and experiences jumping into the wormhole, finding and reading Alice’s message, and then getting crushed into the singularity. How do we escape the conclusion that “the simulation of Bob receiving the message” is a property (and yes, an observable) of Bob’s quantum computer?

Comment #65 December 4th, 2022 at 3:55 pm

@scott#59

Disclaimer: to be honest, I’m something of a skeptic too, and maybe I am misunderstanding Harlow’s proposed experiment. My understanding is that the entangled nature of the quantum computers will somehow allow Simulated Alice to meet Simulated Bob in the Simulated wormhole. But since the computers are far apart, for Alice’s and Bob’s data to meet, there would have to be something like a real wormhole connecting them, since what we’re calling ‘Alice’ and ‘Bob’ are actually physical states of the computers.

The point is that, if that kind of long-range wormhole-like influence isn’t possible in the real world, the computation will have different results when it’s run on the distant entangled quantum computers. Something will go wrong, and Alice and Bob won’t actually be able to meet. Thus a different hash.

The point is, if we simulate a universe with wormholes in it, yeah, it’ll have wormholes in it. But if we implement the simulation in a way that relies on something like wormholes actually existing in the real world, and that isn’t possible in the real world, then it can’t work. So in that case the computation can’t have the same outcome.

Comment #66 December 4th, 2022 at 4:19 pm

maline #64 and Shion Arita #65: At some point we’re going to go around in circles, but from an external observer’s perspective, both a “real” wormhole (formed, say, from two entangled black holes) and a “simulated” wormhole (formed, say, from two entangled quantum computers) are just quantum systems that obey both unitarity and no superluminal signaling. No story about “Alice and Bob meeting in the middle” can ever do anything at all to change the predictions that the external observer would make by applying standard QM. All statements about “meeting in the middle” have empirical significance, at most, for the infalling observers themselves, just like in the simpler case of jumping into a single black hole. Alas, I don’t see how any amount of cleverness can get around this.
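The no-signaling point can be illustrated with the textbook calculation: whatever unitary Alice applies to her half of an entangled state, the reduced density matrix on Bob’s side, and hence everything Bob can observe locally, is unchanged. A minimal numpy sketch, assuming a small maximally entangled state stands in for the entangled black holes or quantum computers, and a random unitary stands in for whatever Alice does on her side; the dimension and function names are hypothetical choices for illustration.

```python
import numpy as np

def random_unitary(dim, rng):
    """Sample a Haar-random unitary via QR decomposition of a complex Gaussian matrix."""
    z = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, r = np.linalg.qr(z)
    phases = np.diag(r) / np.abs(np.diag(r))
    return q * phases  # rescale each column by a phase so the sample is Haar-distributed

def bob_reduced_state(psi, d_alice, d_bob):
    """Trace out Alice's subsystem (first tensor factor) of a pure bipartite state."""
    m = psi.reshape(d_alice, d_bob)   # m[a, b] = amplitude of |a>_Alice |b>_Bob
    return m.T @ m.conj()             # rho_B[b, b'] = sum_a m[a, b] * conj(m[a, b'])

d = 4  # hypothetical: two qubits on each side
rng = np.random.default_rng(seed=0)

# Maximally entangled state sum_i |i>_A |i>_B / sqrt(d), a stand-in for the shared entanglement.
psi = np.eye(d, dtype=complex).reshape(-1) / np.sqrt(d)
rho_before = bob_reduced_state(psi, d, d)

# Alice applies a local unitary to her side only, i.e. U tensor identity on the joint state.
u_alice = random_unitary(d, rng)
psi_after = np.kron(u_alice, np.eye(d)) @ psi
rho_after = bob_reduced_state(psi_after, d, d)

print(np.allclose(rho_before, rho_after))  # True: Bob's local statistics are unchanged
```

Swapping in any other unitary on Alice’s side (for instance, one that encodes a message) gives the same result: Bob’s reduced state stays I/d, which is what no-signaling means concretely in this toy setting.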

Comment #67 December 4th, 2022 at 4:42 pm

This discussion has made me realize something amusing. We are speculating about creating virtual worlds in which simulated people may have the experience of living in a geometry with wormholes, even though our physics (presumably) does not directly allow this. What if our world, analogously, were created by people who lived in a purely classical world and thought it would be amusing to create a simulation that appears quantum from the inside, but in the “outside reality” computes everything slowly in a classical manner, and “steps time” only when those arduous computations are complete? Our subjective time might correspond to aeons in the outside world and we would not know it.

Comment #68 December 4th, 2022 at 5:59 pm

Many people in the High Energy Theory (i.e. string theory, i.e. formal theory, etc.) community would object to the statement that SYK even has a meaningful relation to quantum gravity. Let me explain.

The classic example of AdS/CFT relates a quantum system called super-Yang-Mills (SYM, similar to the strong force) in four dimensions to quantum gravity (i.e. string theory) in 5d with negative curvature (AdS). The quantum system is labeled by two parameters: the number N of colors and the coupling strength. When N is large, extensive evidence shows that SYM is identical to gravity in 5d (with supersymmetry, etc.), i.e. all corrections to gravity are suppressed by 1/N. This means that if you could simulate SYM at large N, then in principle you could simulate a wormhole in 5d. Note that this duality holds no matter which kinematic regime (e.g. temperature) of SYM you study, as long as the label N is large.

Of course, even theoretically computing SYM at large N (including what would be needed to describe a wormhole) is super hard, and simulating it is still a distant dream.

SYK is a 1d quantum system labeled by a somewhat analogous parameter N. But even at large N, it is NOT dual to gravity in 2d AdS (or near-AdS) in the standard holographic sense. Instead, when people say it’s dual to 2d gravity, they mean that there is a part of the theory that is described by 2d gravity, and this part dominates in a certain kinematic regime (e.g. low temperature), but this part of the system CANNOT be distinguished from the rest of the system by ANY label of the system. This is why SYK does not have a quantum gravity dual in the standard sense. What I am saying is quite standard: e.g. if you look at the standard review of SYK by Rosenhaus (arXiv:1807.03334), you see in Figure 5 that in the “AdS dual” there is a big question mark, unlike SYM and other better-understood cases.

You might say that I’m splitting hairs, and that SYK is just dual to quantum gravity in some new, perhaps more general sense. But this sense is so general that pretty much any conformal field theory would be dual to quantum gravity, so the statement becomes almost vacuous. Take the simplest field theory: the critical 3d Ising model. This model can be simulated classically, e.g. by an evaporating cup of water at a certain pressure and temperature, with no need for a fancy quantum computer. Since the critical Ising model is conformal and has a stress tensor, it formally has an AdS description in 4d with a graviton dual to the stress tensor. There may also be kinematic regimes of the Ising model where the stress tensor dominates, which would be dual to the graviton dominating in the bulk. But there is no label like N for the Ising model that can be dialed to guarantee this, so it’s a bit silly to call the Ising model quantum gravity. You could indeed rewrite the 3d Ising model in 4d AdS variables (which has been rigorously done), but this won’t necessarily teach you anything about quantum gravity.

I think it’s a pity some people exaggerated this result, because it would be super cool if a wormhole could one day be simulated by a system with an actual quantum gravity dual. This wouldn’t be a wormhole in our spacetime, but it would still be a big accomplishment, and could well teach us new things about quantum gravity. Exaggerating SYK and its connection to quantum gravity has done our community a disservice.

Comment #69 December 5th, 2022 at 12:37 am

Scott,

” If you had two entangled quantum computers, one on Earth and the other in the Andromeda galaxy, and if they were both simulating SYK, and if Alice on Earth and Bob in Andromeda both uploaded their own brains into their respective quantum simulations, then it seems possible that the simulated Alice and Bob could have the experience of jumping into a wormhole and meeting each other in the middle.”

There is something that bothers me about this ER = EPR thing in both its original form (wormholes) and in this simulation.

As far as I understand, the black holes are entangled as long as they are built out of entangled particles: one particle of each pair goes into BH A and the other into BH B. However, Alice and Bob are not entangled, so isn’t the entanglement terminated when they jump into those BHs?

Likewise, would the entanglement between those computers survive when Alice and Bob (which have some uncorrelated physical states) are uploaded?

Comment #70 December 5th, 2022 at 2:06 am

Gil Kalai (#50):

Thank you very much for the post.

I’d be glad to at least understand point #1: could you please point me to your publication which addresses this?

Thank you very much.

Comment #71 December 5th, 2022 at 2:51 am

Andrei #69: No, in the scenario being discussed, I believe the number of qubits in Alice and Bob is small compared to the number of pre-entangled qubits in the two black holes. In the bulk dual description, Alice and Bob enter the two mouths of the wormhole without appreciably changing its geometry.

Comment #72 December 5th, 2022 at 6:12 am

I guess this means that even if I could pull off the feature (described in gentzen #15) of having two quantum computers that can both read quantum input (let’s assume spin-entangled photons for definiteness), plus the additional feature of detecting (or “post-selecting”) when each of them got one photon from an entangled pair, I would still not be able to simulate the wormhole with “two entangled quantum computers”. I would also need some quantum memory in both computers to collect enough entangled qubits to simulate the relatively large “number of pre-entangled qubits”.

I’m starting to feel just how disappointing the quantum simulation actually performed is, even compared to the thinking described by Daniel Harlow.

Comment #73 December 5th, 2022 at 6:24 am

(LK2#11,#70, Scott)

Scott’s new notion of “practical quantum supremacy” gives me another opportunity to explain a basic ingredient of my argument. To restate Scott’s new notion: “practical quantum supremacy” refers to a noisy quantum device that, for a fixed noise rate, represents classical computation but can in practice manifest “computational supremacy” at intermediate scales. Here “computational supremacy” is the ability to use the device to perform certain computations that are impossible or very hard for digital computers.

A basic ingredient of my argument is:

“‘Practical quantum supremacy’ cannot be achieved.”

As a matter of fact, this proposed principle applies in general, not only to quantum devices.

“Any form of ‘practical computational supremacy’ cannot be achieved.”

In other words, if you have a system or a device that represents computation in P, then you will not be able to tune some parameters of that system or device to achieve superior computations at small or intermediate scales.

NISQ computers with constant error rates (whether constant readout errors or Aharonov et al.’s errors) are, from the point of view of computational complexity, simply classical computing devices. (This is very simple for constant readout errors, and it is Aharonov et al.’s new result for their noise model.) Therefore, our principle implies that they cannot (in principle) be used to demonstrate practical computational supremacy.

This conclusion has far-reaching consequences. The Google experiment had a two-qubit gate fidelity of roughly 99.5%. Pushing it to 99.9% or 99.99% may lead to convincing “practical supremacy” demonstrations. The principle stated above implies that engineers will fail, as a matter of principle, to achieve two-qubit gates of 99.99% quality. This sounds counterintuitive, as improving two-qubit gate quality is widely regarded as a “purely engineering” task. The principle I propose, namely the principle of “no practical computational supremacy”, tells you, based on computational-complexity considerations, that what was considered an engineering task is actually out of reach as a matter of principle. This principle lies at the interface of physics and the theory of computing.
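Why the step from 99.5% to 99.99% matters so much can be made concrete with a common back-of-the-envelope model, which is an assumption here and not something stated in the comment: if gate errors are independent, a circuit’s overall fidelity is roughly the product of its gate fidelities, about F^G for G two-qubit gates. The gate counts below are hypothetical round numbers, not the actual size of Google’s circuits, and the function name is illustrative.

```python
def estimated_circuit_fidelity(gate_fidelity: float, num_gates: int) -> float:
    """Crude estimate: product of independent two-qubit gate fidelities.

    Assumes independent errors and ignores single-qubit gate errors,
    readout errors, and crosstalk.
    """
    return gate_fidelity ** num_gates

# Hypothetical gate counts chosen only for illustration.
for num_gates in (500, 5_000):
    for f in (0.995, 0.999, 0.9999):
        est = estimated_circuit_fidelity(f, num_gates)
        print(f"F = {f:.4f}, G = {num_gates:>5}: estimated fidelity ~ {est:.3g}")
```

Under this crude model, raising F from 0.995 to 0.9999 takes a 500-gate circuit from roughly 8% to roughly 95% estimated fidelity, which illustrates why gate-quality improvements carry so much weight in this debate.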

So let me come back to LK2#11’s questions. The answer to part 3 is “yes”. The assertion that “practical quantum supremacy” is out of reach leads to some limits on the achievable quality of the components of NISQ computers. These limits will also rule out good-quality quantum error correction.

LK2 and Scott, I hope that this explanation helps to clarify my argument. Let me know.